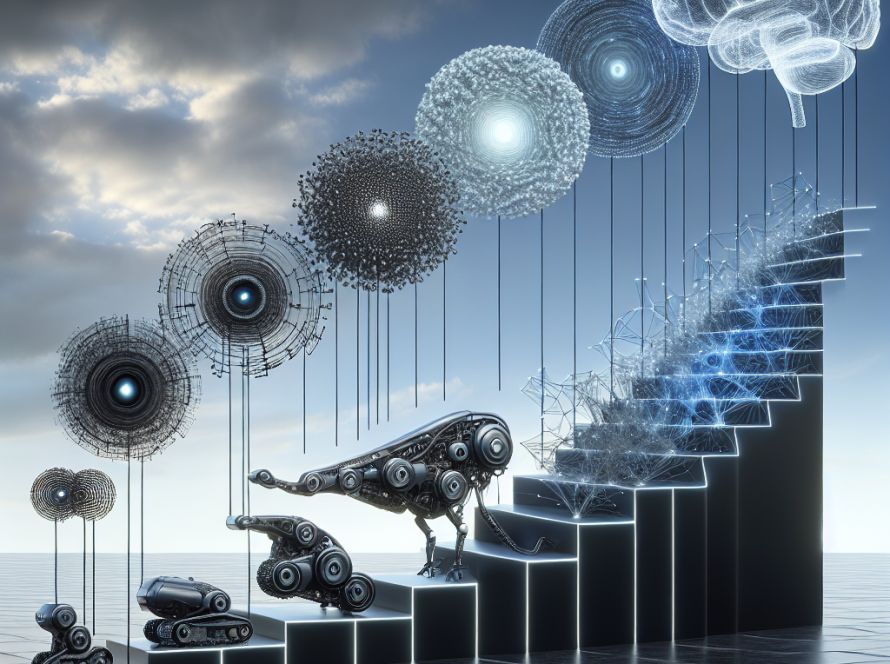

The rapid advancement of Multimodal Large Language Models (MLLMs) has transformed numerous domains. Models like ChatGPT, which are predominantly built on Transformer networks, show great potential but are hindered by quadratic computational complexity, which limits their efficiency. Large Language Models (LLMs), for their part, are less adaptable because they rely solely on language interactions.

To address this limitation, researchers have been extending LLMs with multimodal processing capabilities. Visual Language Models (VLMs) such as GPT-4, LLaMA-Adapter, and LLaVA augment LLMs with visual understanding, equipping them to handle diverse tasks like Visual Question Answering (VQA) and captioning. Most optimization work on VLMs has focused on adjusting the parameters of the base language model while keeping the Transformer structure intact.

In a notable development, researchers from Westlake University and Zhejiang University have designed Cobra, an MLLM with linear computational complexity. Cobra’s distinguishing feature is that it extends the Mamba language model to the visual modality. The researchers explored various modal fusion schemes to tune Cobra’s multimodal integration. Cobra is faster than current efficient methods like LLaVA-Phi and TinyLLaVA while remaining competitive in performance, and it does well on challenging prediction benchmarks. Cobra also matches LLaVA’s performance with significantly fewer parameters, which underscores its efficiency.
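To make the linear-complexity claim concrete, here is a minimal sketch of a selective state space recurrence in the spirit of Mamba. It is a scalar toy, not Cobra's or Mamba's actual implementation: the function name `selective_scan` and the parameters `decay_rate`, `w_delta`, `w_b`, and `w_c` are hypothetical, and real models use learned projections over many channels with a hardware-aware parallel scan.

```python
import math


def softplus(z: float) -> float:
    """Smooth positive function used here to keep the step size above zero."""
    return math.log1p(math.exp(z))


def selective_scan(inputs, decay_rate=1.0, w_delta=0.5, w_b=1.0, w_c=1.0):
    """Toy one-channel selective state space recurrence (illustrative only).

    For each token x_t:
        delta_t = softplus(w_delta * x_t)        # input-dependent step size
        h_t     = exp(-delta_t * decay_rate) * h_{t-1} + delta_t * B_t * x_t
        y_t     = C_t * h_t
    where B_t = w_b * x_t and C_t = w_c * x_t also depend on the input.
    The loop visits each token once, so cost grows linearly with length.
    """
    state = 0.0
    outputs = []
    for x in inputs:
        delta = softplus(w_delta * x)
        decay = math.exp(-delta * decay_rate)  # how much of the old state is kept
        b_t = w_b * x
        c_t = w_c * x
        state = decay * state + delta * b_t * x
        outputs.append(c_t * state)
    return outputs
```

Because the state is a fixed-size summary of everything seen so far, each new token costs the same amount of work no matter how long the preceding sequence is, which is the property that attention over all previous tokens lacks.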

The authors plan to release Cobra’s code as open source, which should help the research community address complexity issues in MLLMs.

While LLMs like GLM and LLaMA aim to challenge InstructGPT, smaller alternatives like Stable LM and TinyLLaMA remain effective. VLMs such as GPT-4V and Flamingo extend LLMs but keep Transformer backbones, which scale poorly because of their quadratic complexity. Related lines of work include compact VLMs such as LLaVA-Phi and MobileVLM, Vision Transformers such as ViT, and state space models such as Mamba, the last of which scales linearly with sequence length.
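The difference between quadratic and linear scaling can be illustrated with a rough operation count. The sketch below is a back-of-the-envelope estimate under simplifying assumptions (single head, no projections, constant factors ignored); the function names and the `state_size` parameter are hypothetical and are not taken from the paper.

```python
def attention_ops(seq_len: int, dim: int) -> int:
    """Approximate multiply-adds for self-attention over one sequence.

    Scoring every token against every other token costs seq_len * seq_len * dim,
    and mixing the values with those scores costs the same again.
    """
    return 2 * seq_len * seq_len * dim


def recurrent_scan_ops(seq_len: int, dim: int, state_size: int) -> int:
    """Approximate multiply-adds for a state space recurrence.

    Each token updates a (dim x state_size) state and reads it out once,
    so the total grows in proportion to seq_len.
    """
    return 2 * seq_len * dim * state_size


def growth_factor(cost_fn, short_len: int, long_len: int, **kwargs) -> float:
    """Ratio of estimated cost at long_len to estimated cost at short_len."""
    return cost_fn(long_len, **kwargs) / cost_fn(short_len, **kwargs)
```

Under these assumptions, doubling the sequence length quadruples the attention estimate but only doubles the recurrence estimate, which is why long visual token sequences are a natural fit for linear-time backbones.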

Cobra combines Mamba’s selective state space model (SSM) with visual understanding. It consists of a vision encoder, a projector, and the Mamba backbone. The vision encoder fuses DINOv2 and SigLIP representations for improved visual understanding, while the projector aligns visual and textual features, using either a multi-layer perceptron (MLP) or a lightweight down-sample projector.
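The data flow described above can be sketched in a few lines of plain Python. This is an illustrative toy, not Cobra's code: it assumes the two encoders' per-patch features are fused by channel-wise concatenation and that the MLP projector has two layers with a GELU in between. All function names, dimensions, and the random weights are hypothetical stand-ins for the real encoders and learned parameters.

```python
import math
import random


def init_linear(rng, out_dim, in_dim):
    """Create a small random weight matrix and zero bias (stand-in for learned weights)."""
    weight = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]
    bias = [0.0] * out_dim
    return weight, bias


def linear(x, weight, bias):
    """Apply y = W x + b to a single feature vector."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


def gelu(x):
    """Exact GELU activation applied elementwise."""
    return [0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0))) for v in x]


def fuse_and_project(feats_a, feats_b, layer1, layer2):
    """Concatenate per-patch features from two encoders, then apply a 2-layer MLP."""
    tokens = []
    for a, b in zip(feats_a, feats_b):
        fused = a + b  # list concatenation = channel-wise concatenation
        hidden = gelu(linear(fused, *layer1))
        tokens.append(linear(hidden, *layer2))
    return tokens


def build_backbone_input(visual_tokens, text_embeddings):
    """Place projected visual tokens ahead of the text embeddings."""
    return visual_tokens + text_embeddings


# Toy dimensions; real encoders produce hundreds of patches with far wider features.
rng = random.Random(0)
num_patches, dim_a, dim_b, lm_dim = 4, 6, 5, 8
feats_a = [[rng.gauss(0, 1) for _ in range(dim_a)] for _ in range(num_patches)]
feats_b = [[rng.gauss(0, 1) for _ in range(dim_b)] for _ in range(num_patches)]
layer1 = init_linear(rng, lm_dim, dim_a + dim_b)
layer2 = init_linear(rng, lm_dim, lm_dim)

visual_tokens = fuse_and_project(feats_a, feats_b, layer1, layer2)
text_embeddings = [[0.0] * lm_dim for _ in range(3)]  # placeholder prompt embeddings
sequence = build_backbone_input(visual_tokens, text_embeddings)
```

The resulting `sequence` is what a language backbone would consume: every element has the language model's embedding width, so visual and textual tokens can be processed by the same sequence model.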

The paper concludes by presenting Cobra as a solution to the efficiency problems faced by Transformer-based MLLMs. By combining a language model of linear computational complexity with multimodal inputs, Cobra optimizes the fusion of visual and linguistic information.

Cobra’s extensive experiments highlight its computational efficiency and show performance on par with advanced models like LLaVA. Cobra does particularly well on tasks involving visual hallucination mitigation and spatial relation judgment. These advances could enable capable AI models in scenarios that demand real-time visual information processing.