Researchers at MIT have found that computational models derived from machine learning increasingly mimic the function and structure of the human auditory system. The finding has significant implications for the design of more effective hearing aids, cochlear implants, and brain-machine interfaces. In the most extensive study to date of deep neural networks trained to perform auditory tasks, the research team found that such models generate internal representations similar to those seen in the human brain when people listen to the same sounds.

The researchers also found that the most effective training for these models used auditory input that included background noise. Models trained this way more closely replicated the activation patterns of the human auditory cortex.

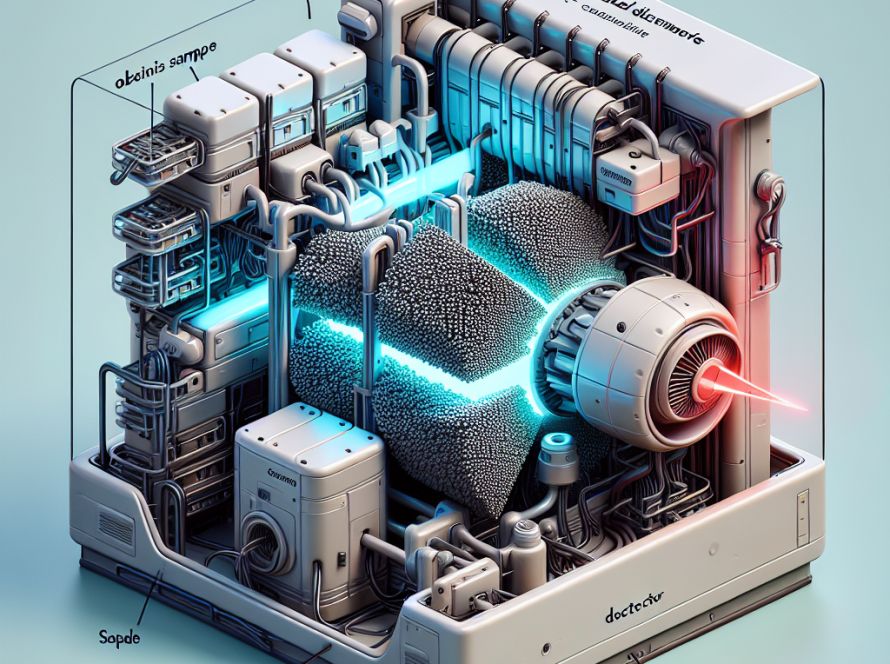

Deep neural networks comprise multiple layers of information-processing units that can be trained on large volumes of data to perform specific tasks. Neuroscientists increasingly use these models to understand how the human brain performs the same tasks. As a network performs an auditory task, its units generate an activation pattern in response to each audio input, such as a word or another sound. These model activation patterns can then be compared with the activation patterns seen in fMRI brain scans of people listening to the same input.
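One common way to make such a comparison is representational similarity analysis, sketched below with NumPy. This is an illustration under stated assumptions, not a description of the study's own pipeline: the function names `rdm` and `rsa_similarity`, the array sizes, and the synthetic "voxel" data are all hypothetical. The idea is to summarize each system by how differently it responds to every pair of sounds, then check whether the two summaries agree.

```python
import numpy as np

def rdm(responses):
    """Representational dissimilarity matrix.

    `responses` has one row per sound and one column per unit (or voxel).
    Entry (i, j) is 1 minus the Pearson correlation between the response
    patterns evoked by sound i and sound j.
    """
    return 1.0 - np.corrcoef(responses)

def rsa_similarity(model_acts, brain_resps):
    """Correlate the off-diagonal upper triangles of the two matrices."""
    iu = np.triu_indices(model_acts.shape[0], k=1)
    return np.corrcoef(rdm(model_acts)[iu], rdm(brain_resps)[iu])[0, 1]

# Synthetic stand-ins (assumptions): no real model or fMRI data is used here.
rng = np.random.default_rng(0)
n_sounds, n_units, n_voxels = 20, 50, 200
model_acts = rng.normal(size=(n_sounds, n_units))

# "Voxels" built as a noisy linear mixture of the model units, so they
# share the model's representational structure.
mixing = rng.normal(size=(n_units, n_voxels))
brain_like = model_acts @ mixing + 0.1 * rng.normal(size=(n_sounds, n_voxels))

# "Voxels" that are unrelated to the model.
unrelated = rng.normal(size=(n_sounds, n_voxels))

score_related = rsa_similarity(model_acts, brain_like)
score_unrelated = rsa_similarity(model_acts, unrelated)
print(f"related: {score_related:.2f}, unrelated: {score_unrelated:.2f}")
```

A higher score means the two systems group and separate the same sounds in similar ways. The same comparison can be repeated for each layer of a network to see which layer best matches a given brain region.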

The current research builds on a 2018 study, which found that models trained for auditory tasks showed representations very similar to those seen in the human brain. For the latest study, the team analyzed nine publicly available deep neural network models and created 14 models of their own. Several models were trained to perform single tasks, such as recognizing words or identifying the speaker, while a couple were trained to perform multiple tasks.

When the researchers presented natural sounds to the models, the models' internal representations resembled those of the human brain. Models that had been trained on multiple tasks and exposed to background noise during training were particularly similar to the human brain.
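To make "exposed to background noise during training" concrete, the sketch below mixes a clean signal with noise at a chosen signal-to-noise ratio (SNR), a standard way to build noisy training examples. The article does not say how the researchers added noise, so everything here is an assumption for illustration: the helper name `mix_at_snr`, the 3 dB SNR, the 16 kHz sample rate, the sine tone standing in for speech, and the white noise standing in for real background sound.

```python
import numpy as np

def mix_at_snr(signal, noise, snr_db):
    """Add `noise` to `signal`, scaled so the mixture has the requested SNR in dB."""
    signal_power = np.mean(signal ** 2)
    noise_power = np.mean(noise ** 2)
    # SNR (dB) = 10 * log10(signal_power / noise_power), solved for the noise power.
    target_noise_power = signal_power / (10 ** (snr_db / 10))
    scale = np.sqrt(target_noise_power / noise_power)
    return signal + scale * noise

rng = np.random.default_rng(0)
sample_rate = 16_000                        # samples per second (assumed)
t = np.arange(sample_rate) / sample_rate    # one second of audio
clean = np.sin(2 * np.pi * 440 * t)         # 440 Hz tone standing in for speech
background = rng.normal(size=clean.shape)   # white noise standing in for background sound

noisy = mix_at_snr(clean, background, snr_db=3.0)
```

In a training loop, each clean example would typically be mixed with a different noise clip, often at a randomly drawn SNR, so that the model cannot rely on a fixed noise pattern.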

The research also supports the idea that the human auditory cortex is organized hierarchically, with processing divided into stages that support distinct computational functions. The lab plans to use these findings to develop models that more accurately reproduce human brain responses, which could aid the development of better hearing aids and other applications.