A new study from MIT suggests that computational models rooted in machine learning are moving closer to simulating the structure and function of the human auditory system. Such models could aid the development of better hearing aids, cochlear implants, and brain-machine interfaces. The study analyzed deep neural networks trained on auditory tasks and found that their internal representations resemble those of the human brain when both process the same sounds.

The research also offered insight into how best to train these models: those trained on auditory input that included background noise most closely matched the activation patterns of the human auditory cortex. This suggests that the models become more brain-like when they’re exposed to a more realistic hearing environment.
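To make "auditory input featuring background noise" concrete, here is a minimal sketch of one common way to add noise to a training clip at a chosen signal-to-noise ratio (SNR). It is an illustration only, not the study's training procedure: the function name `mix_at_snr`, the 16 kHz sample rate, the 3 dB SNR, and the synthetic tone and white noise are all assumptions made for this example.

```python
import numpy as np

def mix_at_snr(clean, noise, snr_db):
    """Add `noise` to `clean` so the mixture has the requested SNR in decibels."""
    clean = np.asarray(clean, dtype=float)
    noise = np.asarray(noise, dtype=float)[: len(clean)]
    signal_power = np.mean(clean ** 2)
    noise_power = np.mean(noise ** 2)
    # Scale the noise so that signal_power / scaled_noise_power == 10 ** (snr_db / 10).
    target_noise_power = signal_power / (10 ** (snr_db / 10))
    scale = np.sqrt(target_noise_power / noise_power)
    return clean + scale * noise

rng = np.random.default_rng(0)
t = np.arange(16000) / 16000.0
clean = np.sin(2 * np.pi * 440.0 * t)  # one second of a 440 Hz tone, standing in for a training clip
noise = rng.standard_normal(16000)     # white noise, standing in for recorded background sound
noisy = mix_at_snr(clean, noise, snr_db=3.0)
```

In a real training setup the noise would more plausibly come from recordings of everyday scenes, and the SNR would be varied from clip to clip.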

The study is the most comprehensive examination of these deep neural networks so far. It concluded that models derived from machine learning are a promising step toward accurately modeling the brain. The MIT team also noted that these models can mediate behaviors on a scale that earlier kinds of models couldn’t achieve.

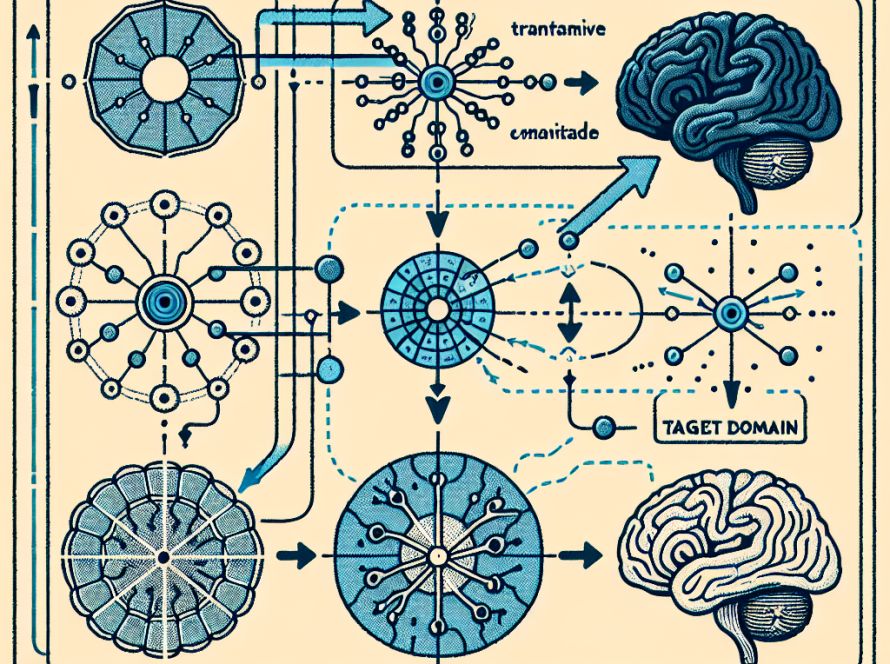

In 2018, Josh McDermott, an associate professor of brain and cognitive sciences at MIT and the senior author of the current study, and then-graduate student Alexander Kell reported that the internal representations of a neural network trained on auditory tasks resembled those seen in fMRI scans of people listening to the same sounds. The latest study builds on that finding by assessing a broader set of models to determine whether the ability to approximate human neural representations is a general trait of such models.
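One common way to quantify how well a model's internal representations match fMRI data is to fit a regularized linear regression from the model's activations to a voxel's responses, then check how well it predicts responses to held-out sounds. The sketch below illustrates that general idea on synthetic data; it is not presented as the researchers' pipeline, and the array sizes, the `ridge_fit` helper, and the `alpha` value are assumptions chosen for the example.

```python
import numpy as np

def ridge_fit(X, y, alpha):
    """Closed-form ridge regression weights for mean-centered data."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)

rng = np.random.default_rng(0)
n_sounds, n_units = 160, 20
acts = rng.standard_normal((n_sounds, n_units))        # stand-in for one model layer's activations
true_w = rng.standard_normal(n_units)
voxel = acts @ true_w + rng.standard_normal(n_sounds)  # stand-in for one voxel's measured responses

# Fit on 120 sounds, evaluate on the 40 held out.
train, test = slice(0, 120), slice(120, 160)
x_mean, y_mean = acts[train].mean(axis=0), voxel[train].mean()
w = ridge_fit(acts[train] - x_mean, voxel[train] - y_mean, alpha=1.0)
pred = (acts[test] - x_mean) @ w + y_mean

# Correlation between predicted and measured held-out responses.
held_out_r = np.corrcoef(pred, voxel[test])[0, 1]
```

A higher held-out correlation means the layer's activations carry more of the information needed to predict that voxel.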

The team analyzed nine publicly available deep neural network models alongside 14 of their own and found that their representations resembled patterns in the human brain responding to the same audio stimuli. The closest matches came from models trained to perform multiple tasks and from models trained on auditory input that included background noise.

The study also supports the idea that the human auditory cortex is organized hierarchically, with processing divided into stages that support different computational functions. The models reflected this organization: their earlier stages most closely resembled the primary auditory cortex, whereas their later stages matched regions beyond the primary cortex.
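The stage-to-region correspondence can be illustrated with a toy version of representational similarity analysis: build a dissimilarity matrix over sounds for each model stage and each brain region, then ask which stage best matches each region. Everything below is synthetic and hypothetical, including the five-stage "model", the two region names, and the `rdm` and `rdm_similarity` helpers; it sketches the kind of comparison involved and does not reproduce the study's analysis.

```python
import numpy as np

def rdm(responses):
    """Dissimilarity (1 - Pearson r) between the response patterns for each pair of sounds."""
    return 1.0 - np.corrcoef(responses)

def rdm_similarity(a, b):
    """Pearson correlation between the upper triangles of two dissimilarity matrices."""
    iu = np.triu_indices_from(a, k=1)
    return np.corrcoef(a[iu], b[iu])[0, 1]

rng = np.random.default_rng(0)
n_sounds, n_features = 40, 50
early = rng.standard_normal((n_sounds, n_features))  # features assumed to drive the primary region
late = rng.standard_normal((n_sounds, n_features))   # features assumed to drive the non-primary region

# A toy five-stage model whose representation shifts gradually from `early` to `late`.
layers = [(1 - m) * early + m * late for m in np.linspace(0.0, 1.0, 5)]

# Synthetic "brain" responses: each region is a noisy copy of one feature set.
regions = {
    "primary": early + 0.1 * rng.standard_normal((n_sounds, n_features)),
    "non-primary": late + 0.1 * rng.standard_normal((n_sounds, n_features)),
}

best_layer = {}
for name, responses in regions.items():
    region_rdm = rdm(responses)
    scores = [rdm_similarity(rdm(layer), region_rdm) for layer in layers]
    best_layer[name] = int(np.argmax(scores))
```

With this construction, the primary region is best matched by the first stage and the non-primary region by the last, mirroring the pattern described above.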

This research could help create more accurate models that reproduce human brain responses, offering further insight into how the brain is organized. That, in turn, could contribute to the development of advanced hearing aids, cochlear implants, and brain-machine interfaces. The aim is a computer model capable of predicting both brain responses and behavior.