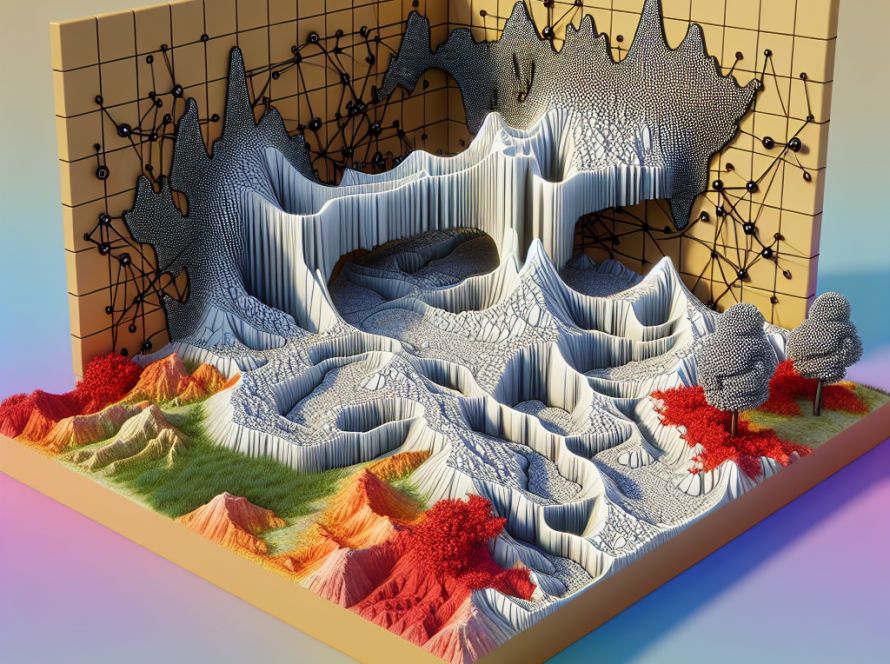

Researchers from the IT University of Copenhagen, Denmark, have proposed a new approach to a challenge in deep neural networks (DNNs) known as the Symmetry Dilemma. This issue arises because standard DNNs have a fixed structure tied to the specific dimensions of their input and output spaces. This rigid structure makes it difficult to optimize a network across domains with different dimensions. The proposed model, called Structurally Flexible Neural Networks (SFNNs), aims to address this limitation.

One common method of addressing this issue, Indirect Encoding, optimizes mechanisms of plasticity (the ability to change in response to input) instead of directly optimizing weight values. Another approach uses Graph Neural Networks (GNNs), which are specifically designed for analyzing data represented as a graph.

The SFNNs proposed by the researchers consist of Gated Recurrent Units (GRUs) and linear layers functioning as neurons. The GRUs serve as rules for synaptic plasticity: they control how the synapses (the connections between neurons in the network) change over time. One of the main advantages of SFNNs is that they optimize not just individual units in the network but also parameters shared between units. This allows SFNNs to express a wider variety of representations during deployment.
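To make the idea of shared plasticity rules more concrete, here is a minimal NumPy sketch. It is not the researchers' implementation. It assumes a single GRU cell whose parameters are reused by every synapse, a shared linear readout followed by `tanh` standing in for the neuron, and randomly drawn initial synapse states. The names `SharedGRUSynapse` and `run_layer` are hypothetical and exist only for this illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class SharedGRUSynapse:
    """One GRU cell whose parameters are reused by every synapse (sketch only)."""

    def __init__(self, hidden_size, rng):
        self.hidden_size = hidden_size
        # Index 0: update gate, 1: reset gate, 2: candidate state.
        self.W = rng.normal(0.0, 0.5, (3, hidden_size))               # scalar input weights
        self.U = rng.normal(0.0, 0.5, (3, hidden_size, hidden_size))  # recurrent weights
        self.b = np.zeros((3, hidden_size))
        # Assumed linear readout that turns a synapse state into a scalar message.
        self.readout = rng.normal(0.0, 0.5, hidden_size)

    def step(self, pre, h):
        # pre: (n_in, n_out) pre-synaptic activation seen by each synapse
        # h:   (n_in, n_out, hidden_size) per-synapse hidden state
        x = pre[..., None]
        z = sigmoid(x * self.W[0] + h @ self.U[0] + self.b[0])
        r = sigmoid(x * self.W[1] + h @ self.U[1] + self.b[1])
        n = np.tanh(x * self.W[2] + (r * h) @ self.U[2] + self.b[2])
        return (1.0 - z) * n + z * h

def run_layer(synapse, inputs_seq, n_out, rng):
    """Run a sequence of input vectors through a fully connected plastic layer."""
    n_in = inputs_seq.shape[1]
    # Assumption: random initial states, so output neurons do not all behave alike.
    h = rng.normal(0.0, 0.1, (n_in, n_out, synapse.hidden_size))
    outputs = []
    for x in inputs_seq:
        pre = np.broadcast_to(x[:, None], (n_in, n_out))
        h = synapse.step(pre, h)          # synapse states change with experience
        messages = h @ synapse.readout    # (n_in, n_out)
        outputs.append(np.tanh(messages.sum(axis=0)))
    return np.array(outputs)

rng = np.random.default_rng(0)
synapse = SharedGRUSynapse(hidden_size=4, rng=rng)
# The same parameter set drives a 3-in/2-out layer and an 8-in/4-out layer.
small_out = run_layer(synapse, rng.normal(size=(5, 3)), n_out=2, rng=rng)
large_out = run_layer(synapse, rng.normal(size=(5, 8)), n_out=4, rng=rng)
print(small_out.shape, large_out.shape)  # (5, 2) (5, 4)
```

Because the number of parameters depends only on `hidden_size`, not on how many synapses exist, the same `synapse` object can be dropped into layers of any shape. In this sketch the only thing that differs between synapses is their hidden state, which is what changes over a lifetime.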

The model's ability to adapt quickly to new environments is demonstrated through a series of experiments. These experiments show that a single set of parameters can be used to solve tasks with different input and output sizes. During these tasks, the plastic synapses and neurons evolve and reorganize to improve performance.

One distinctive feature of SFNNs is the way they handle permutations of inputs and outputs. The inputs and outputs can be reordered at the beginning of each lifetime, and the model still improves its performance over time. This ability to adapt to different configurations of data makes SFNNs robust across varying environments.
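As an illustration of what reordering inputs and outputs for a lifetime could look like in practice, the sketch below fixes one random ordering of observations and actions and applies it at every step. It assumes the reordering is a random permutation held constant for the whole lifetime; the class `PermutedLifetime` and its methods are hypothetical and not taken from the paper.

```python
import numpy as np

class PermutedLifetime:
    """Holds one random ordering of inputs and outputs for a single lifetime."""

    def __init__(self, obs_size, act_size, rng):
        self.obs_perm = rng.permutation(obs_size)
        self.act_perm = rng.permutation(act_size)

    def observe(self, obs):
        # The network sees the observation entries in shuffled order.
        return np.asarray(obs)[self.obs_perm]

    def act(self, net_output):
        # Network output k is routed to environment action slot act_perm[k].
        net_output = np.asarray(net_output)
        action = np.empty_like(net_output)
        action[self.act_perm] = net_output
        return action

rng = np.random.default_rng(0)
lifetime = PermutedLifetime(obs_size=4, act_size=2, rng=rng)

obs = np.array([0.1, 0.2, 0.3, 0.4])
seen = lifetime.observe(obs)                  # same values, lifetime-specific order
action = lifetime.act(np.array([1.0, -1.0]))  # outputs routed to shuffled slots
```

A network with fixed weights tied to specific input positions would have to relearn the mapping under each new ordering. A network that adapts within its lifetime only needs to discover the ordering through experience.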

However, the researchers acknowledged some limitations of their study. One major drawback is that as the input and output sizes used in the environment increase, the symmetry dilemma is magnified, making it harder for the network to learn.

Despite these limitations, SFNNs show promise for flexible, dynamic learning across environments with different input and output dimensions. This flexibility allows for rapid improvement and makes the network more robust. The results of the study are available in a paper published by the researchers.

The researchers are optimistic that their work with SFNNs represents an innovative approach to handling the symmetry dilemma in neural network optimization. This could open new avenues in AI research and development, with potential for widespread applications in fields that require adaptable, learning networks.