As you walk down a busy city street, the hum of a passing machine catches your attention: a small automated delivery robot weaving quickly and nimbly among pedestrians and urban obstacles. This is not a scene from a science fiction film. It is the kind of capability that a new technique called Generalizable Neural Feature Fields (GeFF) is designed to enable. The method could reshape how robots perceive and interact with complex surroundings, something even the most sophisticated robots have historically struggled with.

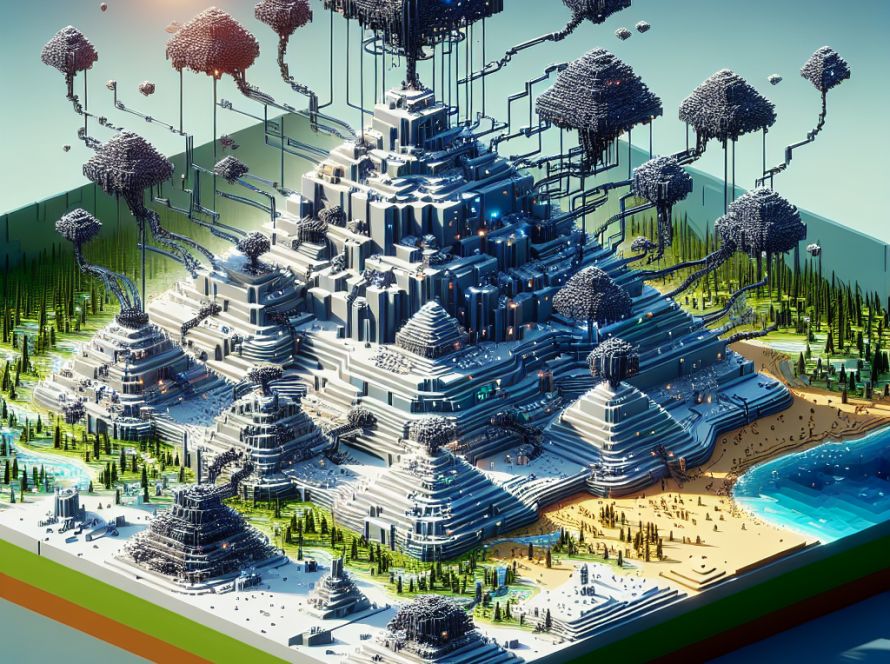

Rather than relying on hand-built maps or raw sensor readings alone, GeFF uses neural networks to interpret the data its sensors collect. It processes RGB-D camera images (color plus depth) of a 3D scene and encodes both geometric and semantic information into a single, unified 3D representation. The process doesn't stop there: GeFF also aligns this representation with natural language, using features distilled from a pre-trained vision-language model. The robot can then identify and interact with specific objects that are described in everyday words, such as a particular item in a cluttered living room.

Robots equipped with GeFF can find their way through unfamiliar settings by combining visual and linguistic cues to understand their environment and plan efficient paths. They can detect and avoid obstacles and adjust their route on the fly when something changes ahead, such as a crowd forming. They can also work with objects they have never encountered before, recognizing them in real time from open-ended language descriptions.

This technology is not merely a future prospect. It has already been deployed and tested on robots operating in real-world environments such as university labs, offices, and homes. Tasks assigned to these robots include avoiding dynamic obstacles, locating and retrieving objects in response to natural-language commands, and carrying out combined navigation and manipulation tasks.

Despite these breakthroughs, the technology is still at an early stage, with considerable room for refinement. The system needs to be prepared for extreme conditions and edge cases, and the neural representations behind GeFF's perception require further optimization. Integrating GeFF's high-level planning with lower-level robot control systems also remains a complex challenge.

Even so, GeFF marks a significant advance in robotics and offers a glimpse of a new era of autonomous robots that can interpret their environment in depth and respond to it. It provides a powerful framework for scene-level perception and action. GeFF generalizes across scenes, incorporates semantic understanding, and runs in real time. Together, these abilities open the way for future autonomous robots to navigate and manipulate their environments with greater sophistication and adaptability.

As GeFF continues to evolve, it could play a fundamental role in shaping the future of robotics, bringing us closer to a world where robots operate autonomously and work naturally alongside humans.