Researchers from the University of Minnesota have developed a new method to improve the performance of large language models (LLMs) on knowledge graph question-answering (KGQA) tasks. The approach, GNN-RAG, uses Graph Neural Networks (GNNs) as the retrieval component of a retrieval-augmented generation (RAG) framework, which improves the LLMs’ ability to answer questions accurately.

LLMs have notable natural language understanding capabilities, thanks to pre-training on an extensive range of text data. However, they adapt poorly to new or specialized knowledge, which can lead to inaccurate answers. Knowledge Graphs (KGs) store information in a structured form that is easy to update and that supports tasks such as KGQA. RAG frameworks can improve LLMs by supplying them with information retrieved from KGs, which is crucial for producing accurate responses in KGQA tasks.

KGQA methods are typically divided into Semantic Parsing (SP) and Information Retrieval (IR) categories. SP methods convert questions into logical queries and answer them by executing those queries over the KG; however, they depend on annotated queries for training and can generate queries that are not executable. IR methods, on the other hand, operate in weakly supervised settings: they retrieve KG information for question answering without explicit query annotations. The researchers found that combining GNNs with RAG could improve KGQA, even outperforming existing methods, by using GNNs for retrieval and an LLM, via RAG, for reasoning.
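
To make the SP/IR distinction concrete, here is a minimal Python sketch over a toy KG. The triples, relation names, and the functions `execute_query` and `retrieve_subgraph` are hypothetical illustrations, not part of GNN-RAG. The SP side assumes a question has already been parsed into an `(entity, relation)` query; the IR side simply collects the triples within a few hops of the question entity, with no query at all.

```python
# Toy KG of (head, relation, tail) triples; entities and relation names are hypothetical.
TRIPLES = [
    ("Jamaica", "official_language", "English"),
    ("Jamaica", "capital", "Kingston"),
    ("Jamaica", "spoken_language", "Jamaican Patois"),
    ("English", "language_family", "Germanic"),
]


def execute_query(triples, query):
    """SP-style: execute an already-parsed (entity, relation) query.

    A query whose relation does not exist in the KG returns an empty set,
    a simple stand-in for a non-executable logical form.
    """
    entity, relation = query
    return {t for h, r, t in triples if h == entity and r == relation}


def retrieve_subgraph(triples, entity, hops=1):
    """IR-style: gather every triple within `hops` of the entity, with no query."""
    frontier, visited, subgraph = {entity}, {entity}, set()
    for _ in range(hops):
        next_frontier = set()
        for h, r, t in triples:
            if h in frontier or t in frontier:
                subgraph.add((h, r, t))
                next_frontier.update({h, t} - visited)
        visited |= next_frontier
        frontier = next_frontier
    return subgraph


print(execute_query(TRIPLES, ("Jamaica", "official_language")))  # {'English'}
print(execute_query(TRIPLES, ("Jamaica", "languages")))          # set()
print(len(retrieve_subgraph(TRIPLES, "Jamaica", hops=2)))        # 4
```

In this toy setting the SP query is precise but brittle (a wrong relation name yields nothing), while the IR subgraph is noisier but needs no annotated query. As described in this article, GNN-RAG’s GNN retriever reasons over a subgraph of this kind to pick out candidate answers.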

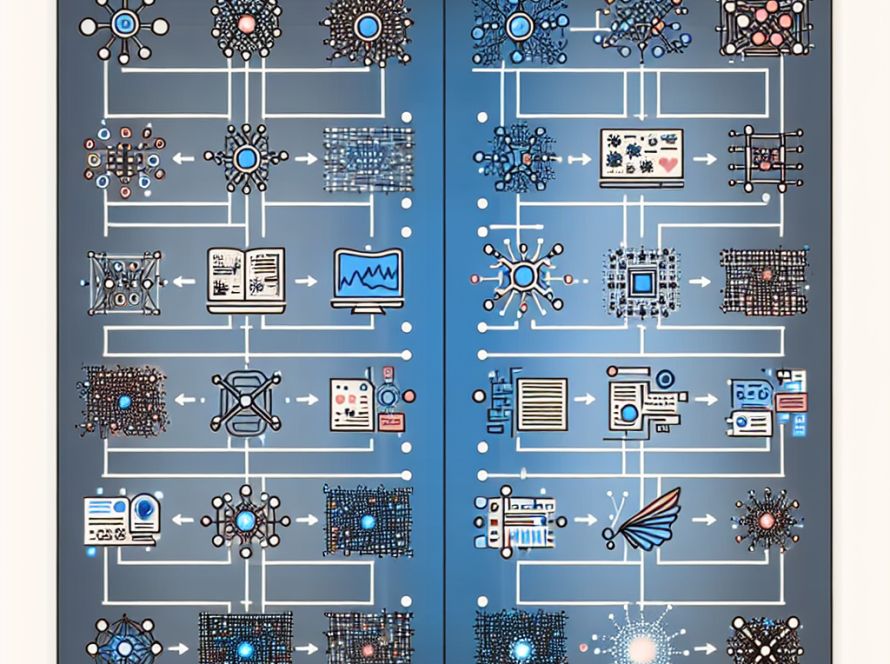

The GNN-RAG method first uses a GNN for retrieval: the GNN reasons over a dense KG subgraph to identify candidate answers. It then extracts the shortest paths in the KG that connect the question entities to the GNN’s candidate answers, converts these paths into text, and feeds them to the LLM for reasoning via RAG. The system can also incorporate LLM-based retrievers to further improve KGQA performance.
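
The retrieve-then-verbalize step can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the authors’ code. The GNN itself is not implemented; its output is replaced by a hypothetical dictionary of per-node answer probabilities (`gnn_scores`) thresholded at an assumed 0.5. The arrow-based path format and the `build_prompt` template are likewise made up for illustration. For brevity, the breadth-first search keeps a single shortest path per question-entity/candidate pair and lets edges be traversed in both directions.

```python
from collections import deque

# Toy KG subgraph of (head, relation, tail) triples; hypothetical data.
TRIPLES = [
    ("Jamaica", "official_language", "English"),
    ("Jamaica", "capital", "Kingston"),
    ("Jamaica", "spoken_language", "Jamaican Patois"),
    ("English", "language_family", "Germanic"),
]


def build_adjacency(triples):
    """Map each node to (relation, neighbor, is_forward) entries in both directions."""
    adj = {}
    for h, r, t in triples:
        adj.setdefault(h, []).append((r, t, True))
        adj.setdefault(t, []).append((r, h, False))
    return adj


def shortest_path(adj, source, target):
    """BFS returning one shortest path as (prev, relation, node, is_forward) hops."""
    parents = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            break
        for rel, nbr, forward in adj.get(node, []):
            if nbr not in parents:
                parents[nbr] = (node, rel, forward)
                queue.append(nbr)
    if target not in parents:
        return None  # target unreachable from source
    hops, node = [], target
    while parents[node] is not None:
        prev, rel, forward = parents[node]
        hops.append((prev, rel, node, forward))
        node = prev
    return list(reversed(hops))


def verbalize(hops):
    """Render a non-empty path as text; '<-' marks an edge traversed backward."""
    parts = [hops[0][0]]
    for _, rel, node, forward in hops:
        parts.append(f"-> {rel} -> {node}" if forward else f"<- {rel} <- {node}")
    return " ".join(parts)


def retrieve_paths(triples, question_entities, gnn_scores, threshold=0.5):
    """Keep candidates whose (assumed) GNN score passes the threshold, then verbalize paths."""
    adj = build_adjacency(triples)
    candidates = [n for n, p in gnn_scores.items() if p >= threshold]
    paths = []
    for q in question_entities:
        for cand in candidates:
            if cand == q:
                continue
            hops = shortest_path(adj, q, cand)
            if hops:
                paths.append(verbalize(hops))
    return paths


def build_prompt(question, paths):
    """Hypothetical RAG prompt: reasoning paths followed by the question."""
    return "Reasoning paths:\n" + "\n".join(paths) + f"\n\nQuestion: {question}\nAnswer:"


gnn_scores = {"English": 0.91, "Jamaican Patois": 0.74, "Kingston": 0.08, "Germanic": 0.03}
paths = retrieve_paths(TRIPLES, ["Jamaica"], gnn_scores)
print(build_prompt("What languages are spoken in Jamaica?", paths))
```

Passing verbalized paths instead of the whole subgraph keeps the prompt short and is meant to give the LLM the relational chain linking the question entity to each candidate, which is the kind of multi-hop evidence the method relies on.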

According to the researchers’ results, GNN-RAG compares favorably with other methods. It performs especially well on complex graph structures and on multi-hop or multi-entity questions. Combining LLM-based and GNN-based retrieval also improves answer diversity and recall. Finally, GNN-RAG improves the performance of a range of LLMs and boosts weaker models by significant margins.
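
The benefit of combining retrievers can be illustrated with answer recall: the fraction of gold answers that appear among the retrieved candidates. In this minimal sketch, the path sets attributed to a GNN retriever and an LLM-based retriever are invented toy data, and merging them by an order-preserving union is an assumption about how the two outputs are combined.

```python
# Reasoning paths as (head, relation, tail) tuples; all data below is hypothetical.
gnn_paths = [("Jamaica", "official_language", "English")]
llm_paths = [
    ("Jamaica", "spoken_language", "Jamaican Patois"),
    ("Jamaica", "capital", "Kingston"),  # an irrelevant path the LLM retriever also returns
]
gold_answers = {"English", "Jamaican Patois"}


def combine(*path_lists):
    """Order-preserving union of several retrievers' paths, dropping duplicates."""
    seen, merged = set(), []
    for paths in path_lists:
        for path in paths:
            if path not in seen:
                seen.add(path)
                merged.append(path)
    return merged


def answer_recall(paths, gold):
    """Fraction of gold answers that appear as the final node of some path."""
    answers = {path[-1] for path in paths}
    return len(answers & gold) / len(gold)


combined = combine(gnn_paths, llm_paths)
print(answer_recall(gnn_paths, gold_answers))  # 0.5
print(answer_recall(llm_paths, gold_answers))  # 0.5
print(answer_recall(combined, gold_answers))   # 1.0
print(len(combined))                           # 3
```

Here the union raises recall because each retriever finds an answer the other misses, at the cost of passing an extra irrelevant path (Kingston) to the LLM. The toy numbers only illustrate the mechanism and are not results from the paper.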

GNN-RAG makes several key contributions. First, it uses GNNs for retrieval to support LLM reasoning, and an analysis of retrieval behavior informs a retrieval augmentation technique that further improves GNN-RAG’s effectiveness. Second, GNN-RAG achieves state-of-the-art performance on the WebQSP and CWQ benchmarks and is effective at retrieving multi-hop information, which is crucial for faithful LLM reasoning. Finally, it improves the KGQA performance of vanilla LLMs without extra computational cost; with a tuned 7B LLM, it matches or even surpasses GPT-4. Overall, GNN-RAG is a versatile and efficient approach for improving KGQA across diverse scenarios and LLM architectures.