Google Research has released FAX, a software library for expressing and running federated learning computations. Built on JAX, FAX is designed for large-scale, distributed federated computations and targets both data center and cross-device applications. Because it relies on JAX's sharding mechanisms, FAX integrates with Tensor Processing Units (TPUs) and with more sophisticated JAX runtimes such as Pathways.

The FAX library offers several benefits, chiefly by embedding the essential building blocks of federated computations as primitives inside JAX. As a result, it inherits JAX's scalability, straightforward just-in-time (JIT) compilation, and automatic differentiation (AD). FAX is aimed at federated learning (FL), in which clients collaborate on a machine learning task without sharing their raw data. Federated computations of this kind typically involve many clients training models in parallel, with periodic synchronization between them.
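To make the structure of such a federated computation concrete, here is a minimal sketch of one FedAvg-style round written in plain JAX, not with FAX's API. It assumes a toy linear-regression model and stacks client data along a leading array axis; `client_loss`, `local_train`, and `fedavg_round` are hypothetical names. `jax.vmap` plays the role of running all clients in parallel, the final mean is the synchronization step, and `jax.jit` compiles the whole round.

```python
import jax
import jax.numpy as jnp

# Hypothetical toy setup (not FAX's API): a linear model whose parameters are a weight vector.
def client_loss(w, x, y):
    # Mean squared error on one client's local data.
    return jnp.mean((x @ w - y) ** 2)

def local_train(w, x, y, lr=0.1, steps=5):
    # A few steps of plain gradient descent on one client's data.
    def step(w, _):
        return w - lr * jax.grad(client_loss)(w, x, y), None
    w, _ = jax.lax.scan(step, w, None, length=steps)
    return w

@jax.jit
def fedavg_round(server_w, client_x, client_y):
    # Broadcast: every client starts from the same server weights (in_axes=None),
    # while client data is mapped along axis 0, one slice per client.
    client_w = jax.vmap(local_train, in_axes=(None, 0, 0))(server_w, client_x, client_y)
    # Aggregate: the unweighted mean of client weights becomes the new server model.
    return jnp.mean(client_w, axis=0)

# Usage: 8 clients, 16 examples each, 3 features, noise-free targets.
key = jax.random.PRNGKey(0)
true_w = jnp.array([1.0, -2.0, 0.5])
client_x = jax.random.normal(key, (8, 16, 3))
client_y = client_x @ true_w

w = jnp.zeros(3)
for _ in range(50):
    w = fedavg_round(w, client_x, client_y)
print(w)  # should approach true_w
```

Stacking clients on an array axis lets JAX batch their identical local updates; what FAX adds, according to its authors, is making the broadcast and aggregation steps first-class primitives rather than ad hoc array operations.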

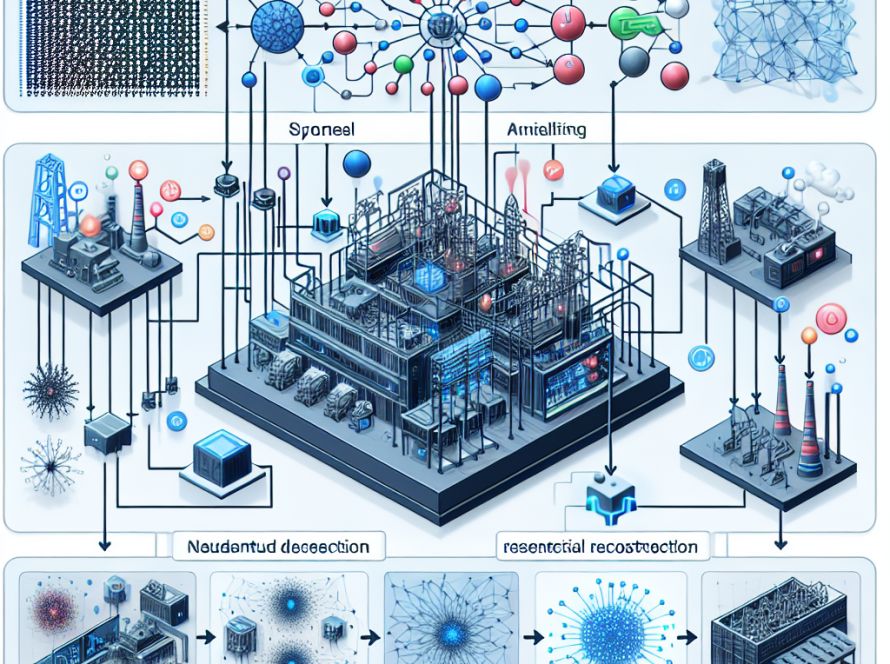

Although federated learning applications may involve on-device clients, performant data center software remains critical. FAX addresses this by providing a framework for defining scalable distributed and federated computations in the data center. Drawing on advances in distributed training such as Pathways and GSPMD, the library can shard computations across both models and clients, and can further split each client's data across physical and logical device meshes.
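The sketch below shows the underlying JAX mechanism that this kind of sharding builds on, using only standard `jax.sharding` APIs rather than FAX itself. It assumes clients are stacked along the leading array axis and maps that axis onto a one-dimensional device mesh whose axis name, `"clients"`, is chosen for illustration. On a machine with a single CPU device it still runs; the mesh simply has one entry.

```python
import jax
import jax.numpy as jnp
import numpy as np
from jax.sharding import Mesh, NamedSharding, PartitionSpec as P

# A 1-D mesh over all available devices; the axis name "clients" is illustrative.
mesh = Mesh(np.array(jax.devices()), axis_names=("clients",))

# Assumed layout: clients stacked on axis 0, per-client features on axis 1.
num_clients = 4 * len(jax.devices())  # keep the client axis divisible by the device count
client_data = jnp.arange(num_clients * 3, dtype=jnp.float32).reshape(num_clients, 3)

# Split the client axis across the mesh and replicate the feature axis.
sharding = NamedSharding(mesh, P("clients", None))
client_data = jax.device_put(client_data, sharding)

@jax.jit
def per_client_sum(x):
    # A per-client reduction: the compiler can keep it local to the device holding each row.
    return jnp.sum(x, axis=1)

sums = per_client_sum(client_data)
print(sums.sharding)  # output sharding chosen by the compiler
```

Using a named mesh axis means the same code runs unchanged on one device or many: `jax.jit` partitions the computation according to the input shardings, which is the kind of automatic partitioning GSPMD provides.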

The Google team has also shown that FAX supports federated automatic differentiation (federated AD), enabling both forward- and reverse-mode differentiation through JAX's primitive mechanism. Along the way, it preserves information about where data is placed (for example, on the server or on the clients) throughout differentiation.
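FAX implements its federated building blocks as JAX primitives with their own differentiation rules, which is how it tracks placement. The sketch below is much simpler: it models broadcast and aggregation as ordinary array operations, using hypothetical helpers `federated_broadcast` and `federated_mean` over a leading client axis, so it does not track placement at all. It does show that JAX's reverse mode (`jax.grad`) and forward mode (`jax.jvp`) both differentiate through a server-to-clients-to-server round and agree.

```python
import jax
import jax.numpy as jnp

NUM_CLIENTS = 3
# Hypothetical per-client constants standing in for client-specific data.
client_scales = jnp.array([1.0, 2.0, 3.0])

def federated_broadcast(x):
    # Hypothetical helper: replicate a server value onto a leading client axis.
    return jnp.broadcast_to(x, (NUM_CLIENTS,) + jnp.shape(x))

def federated_mean(xs):
    # Hypothetical helper: average per-client values back to a single server value.
    return jnp.mean(xs, axis=0)

def round_fn(server_w):
    client_w = federated_broadcast(server_w)    # server -> clients
    client_out = client_scales * client_w ** 2  # local per-client computation
    return federated_mean(client_out)           # clients -> server

w = jnp.float32(2.0)
# Reverse mode. Analytically, d/dw mean_k(s_k * w**2) = 2 * w * mean(s) = 2 * 2.0 * 2.0 = 8.0.
grad_w = jax.grad(round_fn)(w)
# Forward mode: a JVP with a unit tangent yields the same derivative.
_, jvp_w = jax.jvp(round_fn, (w,), (jnp.float32(1.0),))
print(grad_w, jvp_w)
```

In reverse mode, the cotangents flowing back through the broadcast are summed over clients, since summation is the transpose of replication.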

Among FAX's primary contributions, the team highlights the efficient translation of FAX computations into XLA HLO, the intermediate representation consumed by XLA, a domain-specific compiler for linear algebra. By compiling through XLA, FAX can take full advantage of hardware accelerators such as TPUs, improving performance and efficiency.
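For readers who want to see this compilation path, the sketch below applies JAX's standard ahead-of-time lowering API to a small aggregation function. The function name is hypothetical and this is not a FAX computation; it only shows where HLO appears. `Lowered.as_text()` returns the StableHLO module that JAX emits, and `Compiled.as_text()` returns XLA's optimized HLO for the current backend, which is where accelerator-specific optimizations show up.

```python
import jax
import jax.numpy as jnp

def aggregate_clients(client_w):
    # Hypothetical aggregation step: average client models along the client axis.
    return jnp.mean(client_w, axis=0)

example = jnp.ones((4, 3))  # 4 clients, 3 parameters each
lowered = jax.jit(aggregate_clients).lower(example)

stablehlo_text = lowered.as_text()  # StableHLO module emitted by JAX
compiled = lowered.compile()        # XLA compiles for the current backend
compiled_text = compiled.as_text()  # optimized HLO for that backend

print(stablehlo_text)
print(compiled_text)
```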

The library also includes a full implementation of federated automatic differentiation, which automates gradient computation in complex federated learning setups. This simplifies the expression of federated computations and streamlines differentiation, a core part of training machine learning models.
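As an illustration of what differentiating through an entire federated round makes possible, the sketch below computes a hypergradient: the derivative of the post-round loss with respect to the clients' learning rate. It is written in plain JAX rather than with FAX, assumes a toy linear model and a FedSGD-style round with one local step per client, and uses hypothetical function names. At a small learning rate the hypergradient should be negative, because a slightly larger step still lowers the loss.

```python
import jax
import jax.numpy as jnp

def client_loss(w, x, y):
    # Mean squared error of a hypothetical linear model on one client's data.
    return jnp.mean((x @ w - y) ** 2)

def fedsgd_round(server_w, lr, client_x, client_y):
    # Each client takes one gradient step from the server weights; the server averages.
    def local_step(x, y):
        return server_w - lr * jax.grad(client_loss)(server_w, x, y)
    client_w = jax.vmap(local_step)(client_x, client_y)
    return jnp.mean(client_w, axis=0)

def post_round_loss(lr, server_w, client_x, client_y):
    # Average client loss of the aggregated model after one round.
    new_w = fedsgd_round(server_w, lr, client_x, client_y)
    losses = jax.vmap(client_loss, in_axes=(None, 0, 0))(new_w, client_x, client_y)
    return jnp.mean(losses)

# Toy data: 8 clients, 16 examples each, 3 features.
key = jax.random.PRNGKey(0)
client_x = jax.random.normal(key, (8, 16, 3))
client_y = client_x @ jnp.array([1.0, -2.0, 0.5])

# Hypergradient of the post-round loss w.r.t. the client learning rate,
# obtained by differentiating through local training and aggregation.
hypergrad = jax.grad(post_round_loss)(0.05, jnp.zeros(3), client_x, client_y)
print(hypergrad)
```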

Finally, FAX is designed to be compatible with existing cross-device federated computing systems. As a result, computations written with FAX, whether they involve data center servers or on-device clients, can be efficiently deployed and executed in real-world federated learning environments.

In summary, FAX is a versatile tool that can be used for a range of machine learning computations in the data center, including ones beyond federated learning. It supports a variety of distributed and parallel algorithms, such as FedAvg, FedOpt, branch-train-merge, DiLoCo, and PAPA. The team encourages interested readers to explore the research paper and GitHub repository and to join their online communities for updates and discussion.
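As an illustration of one algorithm from that list, here is a minimal sketch of the FedOpt idea in plain JAX: the server treats the difference between its model and the average client model as a pseudo-gradient and feeds it to a server-side optimizer, assumed here to be SGD with momentum. The function name and the default `server_lr` and `beta` values are assumptions for illustration, not FAX's implementation. With zero initial momentum and `server_lr=1.0`, the first step reduces to plain FedAvg averaging.

```python
import jax.numpy as jnp

def fedopt_server_update(server_w, client_ws, momentum, server_lr=1.0, beta=0.9):
    # Pseudo-gradient: server model minus the average of the client models.
    pseudo_grad = server_w - jnp.mean(client_ws, axis=0)
    # Server optimizer (assumed here): SGD with heavy-ball momentum on the pseudo-gradient.
    momentum = beta * momentum + pseudo_grad
    new_server_w = server_w - server_lr * momentum
    return new_server_w, momentum

server_w = jnp.zeros(2)
momentum = jnp.zeros(2)

# Round 1: clients end at [1, 1] and [3, 3]; with zero momentum this matches FedAvg (2.0 per coordinate).
server_w, momentum = fedopt_server_update(server_w, jnp.array([[1.0, 1.0], [3.0, 3.0]]), momentum)
print(server_w)

# Round 2: clients end at [3, 3] and [5, 5]; momentum carries the server past their average of 4.
server_w, momentum = fedopt_server_update(server_w, jnp.array([[3.0, 3.0], [5.0, 5.0]]), momentum)
print(server_w)  # approximately 5.8 in each coordinate
```

Replacing the momentum update with an adaptive optimizer such as Adam gives other FedOpt variants; the aggregation step itself is unchanged.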