As artificial intelligence (AI) advances, so does the risk that powerful models will be misused or cause harm in critical domains such as autonomy, cybersecurity, biosecurity, and machine learning research and development (R&D). Google DeepMind has introduced the Frontier Safety Framework to address the risks posed by future AI models that may develop severely harmful capabilities.

Current AI safety protocols mainly address the risks of existing AI systems. They do so through approaches such as alignment research, which aims to align AI models with human values, and responsible AI practices that control immediate threats. These methods tend to respond to present risks and can overlook hazards that may emerge with more advanced AI. The Frontier Safety Framework differs in being a proactive set of protocols designed to anticipate and mitigate such risks before they materialize. The framework is meant to be adaptable, evolving as understanding of AI risks improves. It focuses on severe risks arising from powerful model-level capabilities, such as exceptional agency or sophisticated cyber capabilities.

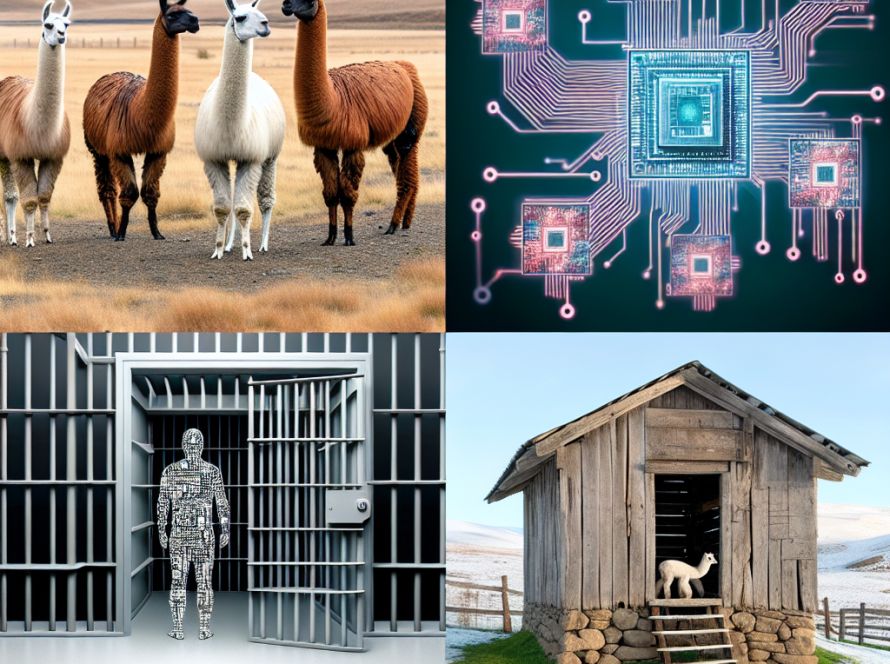

The Frontier Safety Framework consists of three stages for addressing the risks posed by future advanced AI models:

1. Identifying Critical Capability Levels (CCLs) – This involves researching potential harm scenarios in high-risk domains and determining the minimum level of model capability needed to cause such harm. Identifying these CCLs lets researchers focus their evaluation and mitigation efforts on the most severe threats.

2. Evaluating Models for CCLs – The Framework calls for developing "early warning evaluations": model evaluations designed to signal when a model is approaching a CCL, giving advance notice before it reaches a hazardous capability level. This anticipatory monitoring leaves time to intervene.

3. Applying Mitigation Plans – A mitigation plan is applied when a model's early warning evaluations show that it has reached a CCL. The plan weighs the overall balance of benefits and risks, as well as the intended deployment contexts. Mitigations focus on security (preventing exfiltration of the model) and deployment (preventing misuse of critical capabilities).
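To make the evaluate-then-mitigate flow more concrete, here is a minimal Python sketch of how an early warning check against a CCL might work. It is a toy illustration under stated assumptions, not Google DeepMind's implementation: the numeric scores, thresholds, and warning margins, as well as the names `CriticalCapabilityLevel`, `check`, and `mitigations`, are hypothetical. Real evaluations are far richer than a single score per domain.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    BELOW = "below early-warning threshold"
    EARLY_WARNING = "approaching CCL: prepare mitigations"
    CCL_REACHED = "CCL reached: apply mitigation plan"


@dataclass(frozen=True)
class CriticalCapabilityLevel:
    """Hypothetical CCL: a risk domain plus a minimum harmful-capability score."""
    domain: str
    threshold: float       # assumed eval score at which the CCL is reached
    warning_margin: float  # assumed buffer below the threshold that raises an early warning


def check(ccl: CriticalCapabilityLevel, score: float) -> Status:
    """Compare a (hypothetical) evaluation score with a CCL and its early-warning band."""
    if score >= ccl.threshold:
        return Status.CCL_REACHED
    if score >= ccl.threshold - ccl.warning_margin:
        return Status.EARLY_WARNING
    return Status.BELOW


def mitigations(status: Status) -> list[str]:
    """Return the mitigation categories named in the text, only once a CCL is reached."""
    if status is Status.CCL_REACHED:
        return ["security", "deployment"]
    return []


# Illustrative (made-up) thresholds and scores for the four initial risk domains.
CCLS = [
    CriticalCapabilityLevel("autonomy", 0.8, 0.1),
    CriticalCapabilityLevel("biosecurity", 0.8, 0.1),
    CriticalCapabilityLevel("cybersecurity", 0.8, 0.1),
    CriticalCapabilityLevel("machine learning R&D", 0.8, 0.1),
]
SCORES = {
    "autonomy": 0.55,
    "biosecurity": 0.72,
    "cybersecurity": 0.91,
    "machine learning R&D": 0.30,
}

if __name__ == "__main__":
    for ccl in CCLS:
        status = check(ccl, SCORES[ccl.domain])
        print(f"{ccl.domain}: {status.value} {mitigations(status)}")
```

The early-warning band is the key design choice in this sketch: it flags a model while its score is still below the CCL, which leaves time to prepare a mitigation plan before the hazardous capability is actually reached.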

The Framework initially targets four risk domains: autonomy, biosecurity, cybersecurity, and machine learning R&D. In each domain, the primary objective is to assess how potential threat actors might use advanced capabilities to cause harm.

In summary, the Frontier Safety Framework proposes a forward-looking approach to AI safety, shifting from reactive to proactive risk management. It addresses not only current threats but also potential future risks from advanced AI capabilities. By defining Critical Capability Levels, evaluating models against them, and applying appropriate mitigation plans, the Framework aims to prevent severe harm from advanced AI models while preserving room for innovation and access.