Multimodal Large Language Models (MLLMs) such as Flamingo, BLIP-2, LLaVA, and MiniGPT-4 have shown emergent vision-language capabilities. A common limitation, however, is that they struggle to recognize and understand fine details in high-resolution images. To address this, researchers developed InfiMM-HD, an architecture designed to process images of varying resolutions with low computational overhead.

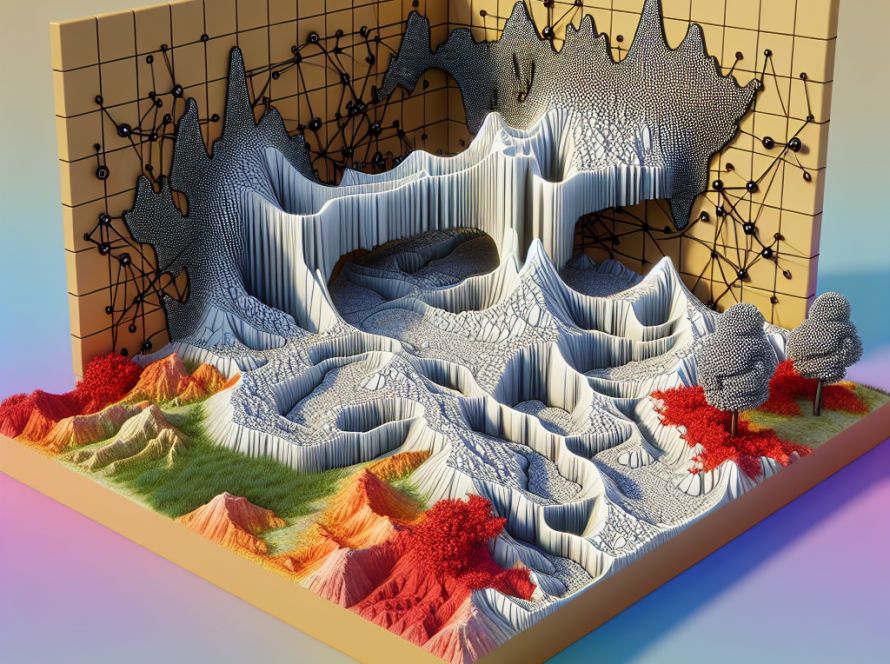

The InfiMM-HD architecture has three main components: a Vision Transformer encoder, a Gated Cross Attention Module, and a Large Language Model (LLM). The Vision Transformer encoder processes the visual input, the LLM handles language processing, and the Gated Cross Attention Module integrates the resulting visual features with the linguistic tokens. The cross-attention module is inserted every four layers among the LLM's decoder layers, so visual information is injected at regular intervals throughout the language model.
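As a rough illustration of how such a module can sit inside a decoder stack, the pure-Python sketch below interleaves a gated cross-attention block with the decoder layers. It assumes Flamingo-style gating (a learnable scalar passed through `tanh` and initialized to zero), single-head attention with no learned projections, and placement before layers 0, 4, 8, and so on. The function names and the identity `decoder_layer` stand-in are hypothetical; this is a sketch under those assumptions, not the InfiMM-HD implementation.

```python
import math
from typing import List

Matrix = List[List[float]]  # one row per token; text and visual dims must match here


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(text: Matrix, visual: Matrix) -> Matrix:
    """Single-head scaled dot-product attention: text tokens are the queries,
    visual tokens serve as both keys and values (learned projections omitted)."""
    scale = 1.0 / math.sqrt(len(visual[0]))
    out = []
    for q in text:
        weights = softmax([scale * sum(a * b for a, b in zip(q, k)) for k in visual])
        out.append([sum(w * v[d] for w, v in zip(weights, visual)) for d in range(len(q))])
    return out


def gated_cross_attention(text: Matrix, visual: Matrix, gate: float) -> Matrix:
    """Add attended visual features to the text tokens, scaled by tanh(gate).
    Assumption: Flamingo-style gating, so gate = 0 makes the block an identity."""
    g = math.tanh(gate)
    attended = cross_attention(text, visual)
    return [[t + g * a for t, a in zip(tok, att)] for tok, att in zip(text, attended)]


def decoder_layer(hidden: Matrix) -> Matrix:
    """Hypothetical stand-in for a pretrained LLM decoder layer (identity here)."""
    return hidden


def forward(text: Matrix, visual: Matrix, num_layers: int,
            gates: List[float], interval: int = 4) -> Matrix:
    """Run the decoder stack, applying a gated cross-attention block before
    every `interval`-th decoder layer (layers 0, interval, 2 * interval, ...)."""
    hidden = text
    for i in range(num_layers):
        if i % interval == 0:
            hidden = gated_cross_attention(hidden, visual, gates[i // interval])
        hidden = decoder_layer(hidden)
    return hidden


if __name__ == "__main__":
    text = [[1.0, 0.0], [0.0, 1.0]]
    visual = [[0.5, 0.5], [1.0, -1.0], [0.0, 2.0]]
    num_layers = 8  # two cross-attention blocks with interval 4
    print(forward(text, visual, num_layers, gates=[0.0, 0.0]))  # prints the text tokens unchanged
    print(forward(text, visual, num_layers, gates=[0.5, 0.5]))
```

Starting each gate at zero means the blocks initially pass the text tokens through untouched; one motivation for this choice is that training can then introduce visual information gradually without disrupting the pretrained language model.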

What sets InfiMM-HD apart is how these modules are trained together through a four-stage training pipeline, which lets the model handle high-resolution images without a prohibitive increase in computational cost. Its cross-attention design also contributes to strong performance across a diverse set of benchmarks.
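To make the efficiency argument more concrete, the sketch below counts attention query-key pairs for two layouts: a hypothetical design that prepends all visual tokens to the LLM input sequence, and the cross-attention layout with one block every four decoder layers. The patch size of 14, the 128-token text prompt, and the 32-layer decoder are illustrative assumptions, not figures from InfiMM-HD, and the count ignores the vision encoder, feed-forward layers, and any visual-token compression.

```python
def visual_token_count(height: int, width: int, patch: int = 14) -> int:
    """Patch tokens a ViT emits for one image (assumed patch size of 14)."""
    return (height // patch) * (width // patch)


def concat_attention_pairs(text_len: int, vis_len: int, num_layers: int) -> int:
    """Query-key pairs when visual tokens are prepended to the LLM input and
    every decoder layer self-attends over text and visual tokens together."""
    seq_len = text_len + vis_len
    return num_layers * seq_len * seq_len


def cross_attention_pairs(text_len: int, vis_len: int, num_layers: int,
                          interval: int = 4) -> int:
    """Query-key pairs when the LLM self-attends over text tokens only and
    text queries attend to visual keys in one block per `interval` layers."""
    self_pairs = num_layers * text_len * text_len
    cross_pairs = (num_layers // interval) * text_len * vis_len
    return self_pairs + cross_pairs


if __name__ == "__main__":
    text_len, num_layers = 128, 32  # illustrative values, not taken from InfiMM-HD
    for side in (224, 448, 896):
        v = visual_token_count(side, side)
        concat = concat_attention_pairs(text_len, v, num_layers)
        cross = cross_attention_pairs(text_len, v, num_layers)
        print(f"{side}x{side}: {v} visual tokens, concat/cross ratio {concat / cross:.1f}")
```

Doubling the image side length quadruples the number of visual tokens. Under these assumptions, the concatenation layout's attention cost grows roughly quadratically with that token count, while the cross-attention layout's grows only linearly, which is one way to see why cross-attention suits high-resolution inputs.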

Cross-attention is especially beneficial for high-resolution inputs. The model still has limitations, however, particularly in text comprehension. Building on InfiMM-HD's initial results, ongoing research focuses on refining modality-alignment methods and expanding training datasets to further improve overall performance.

Like other MLLMs, InfiMM-HD can generate incorrect information or reinforce misconceptions, so the ethical use of InfiMM-HD and similar technologies remains under continuous evaluation. A priority is detecting potential biases and actively addressing them so that these technologies can be deployed responsibly. As Artificial Intelligence and MLLMs continue to evolve, decision-makers need to stay aware of these concerns and build ethical considerations into their plans to prevent unforeseen problems.

In conclusion, InfiMM-HD offers a promising new approach for MLLMs, combining improved performance with computational efficiency when processing high-resolution images. Despite its current limitations, its ability to handle high-resolution images represents a significant advance in the field.