Advances in Artificial Intelligence (AI), particularly in Large Language Models (LLMs), have driven significant progress in language generation, with applications expanding into sectors such as healthcare, finance, and education. However, the growth in model size has also increased inference latency, making inference a bottleneck that complicates the use of LLMs in real-world scenarios.

Efforts to address these limitations include speculative decoding. This technique uses a smaller draft model to propose several tokens that the full model then checks together, which reduces the number of decoding steps and increases the arithmetic intensity of the decoding process. However, integrating these draft models into distributed systems has presented challenges.
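
To make the draft-model idea concrete, here is a minimal sketch of one greedy speculative-decoding step. The toy `draft_model` and `target_model` functions are hypothetical stand-ins for a small and a large LLM, and the verification loop is written sequentially for clarity, whereas real implementations score all drafted positions in a single forward pass of the large model.

```python
from typing import Callable, List

# Hypothetical toy "models": each maps a token prefix to its greedy next token.
NextToken = Callable[[List[int]], int]


def speculative_step(prefix: List[int], draft: NextToken,
                     target: NextToken, k: int) -> List[int]:
    """One greedy speculative-decoding step.

    The draft proposes k tokens; the target keeps the longest prefix it
    agrees with, plus one token of its own.
    """
    # 1. The small draft model proposes k tokens autoregressively.
    proposal: List[int] = []
    for _ in range(k):
        proposal.append(draft(prefix + proposal))

    # 2. The target model verifies the proposal position by position.
    accepted: List[int] = []
    for token in proposal:
        expected = target(prefix + accepted)
        if token != expected:
            # First disagreement: keep the target's own token and stop.
            accepted.append(expected)
            return accepted
        accepted.append(token)

    # 3. Every drafted token matched, so the target adds one bonus token.
    accepted.append(target(prefix + accepted))
    return accepted


# Toy models: the target counts upward; the draft agrees except after token 3.
target_model: NextToken = lambda seq: seq[-1] + 1
draft_model: NextToken = lambda seq: 0 if seq[-1] == 3 else seq[-1] + 1

print(speculative_step([0], draft_model, target_model, k=4))
```

Here the draft proposes `[1, 2, 3, 0]`, the target accepts the first three and replaces the fourth, so one step yields four tokens instead of one.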

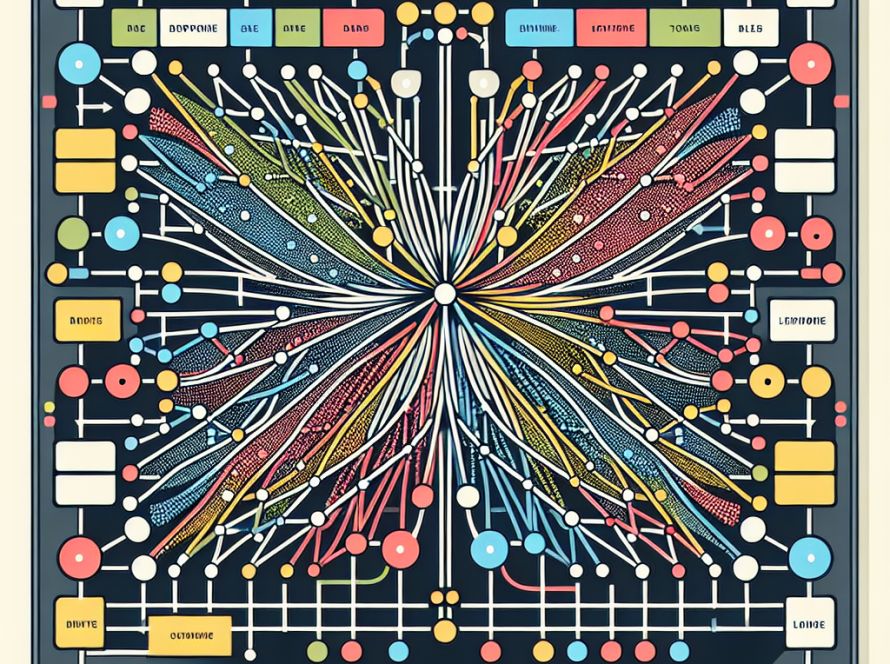

To address these issues, a recent study introduced MEDUSA, an efficient method that accelerates LLM inference by adding extra decoding heads on top of the backbone model to predict multiple subsequent tokens in parallel. Unlike speculative decoding, MEDUSA does not require a separate draft model: the extra heads reuse the backbone model's own hidden states, so MEDUSA can be integrated easily into existing LLM systems, even in distributed settings.
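
The sketch below illustrates how several extra heads can read the same last hidden state and each predict a different future position. It assumes a single-layer residual head of the form softmax(W2 · (SiLU(W1 · h) + h)), which is our reading of the MEDUSA paper; the toy sizes, random weights, and helper names are hypothetical, and plain Python lists stand in for tensors.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # row-major: one row per output dimension


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def silu(x: float) -> float:
    return x / (1.0 + math.exp(-x))


def softmax(logits: Vector) -> Vector:
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def medusa_head(h: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """Assumed head form: softmax(W2 @ (SiLU(W1 @ h) + h))."""
    hidden = [silu(z) + x for z, x in zip(matvec(w1, h), h)]  # residual block
    return softmax(matvec(w2, hidden))


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
d_model, vocab, num_heads = 4, 6, 3  # toy sizes for illustration only

# Last hidden state produced by the backbone at position t.
h_t = [rng.gauss(0.0, 1.0) for _ in range(d_model)]

# In this sketch, head k (k = 1..K) predicts the token at position t + k + 1,
# while the backbone's own LM head still predicts position t + 1. All heads
# read the same h_t, so they can be evaluated in parallel.
heads = [(random_matrix(d_model, d_model, rng), random_matrix(vocab, d_model, rng))
         for _ in range(num_heads)]
for k, (w1, w2) in enumerate(heads, start=1):
    probs = medusa_head(h_t, w1, w2)
    best = max(range(vocab), key=probs.__getitem__)
    print(f"head {k} -> token at t+{k + 1}: id {best} (p={probs[best]:.2f})")
```

Because of the residual connection, setting W1 to zero makes a head reduce exactly to softmax(W2 · h_t), which is why a zero initialization of W1 is a natural starting point.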

The MEDUSA team introduced two key ideas: the MEDUSA heads generate multiple candidate continuations that are verified simultaneously in a single decoding step using a tree-based attention mechanism, and an acceptance scheme selects which candidates to keep. They also proposed two procedures for fine-tuning the predictive MEDUSA heads.
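
The candidate-and-verify idea can be sketched as follows, assuming candidates are the full Cartesian product of each position's top-k tokens and that a candidate is accepted up to its first disagreement with the backbone's greedy prediction. The toy backbone is hypothetical, and the candidates are checked one at a time rather than with MEDUSA's tree attention.

```python
import itertools
from typing import Callable, List, Sequence, Tuple

Candidate = Tuple[int, ...]
Backbone = Callable[[List[int]], int]  # hypothetical: prefix -> greedy next token


def build_candidates(head_topk: Sequence[Sequence[int]]) -> List[Candidate]:
    """All continuations formed by taking one top-k token from each position."""
    return list(itertools.product(*head_topk))


def accepted_length(prefix: List[int], candidate: Candidate, backbone: Backbone) -> int:
    """How many leading candidate tokens agree with the backbone's greedy choice."""
    n = 0
    for token in candidate:
        if backbone(prefix + list(candidate[:n])) != token:
            break
        n += 1
    return n


def pick_best(prefix: List[int], head_topk: Sequence[Sequence[int]],
              backbone: Backbone) -> Candidate:
    """Return the longest accepted prefix among all candidates."""
    candidates = build_candidates(head_topk)
    # MEDUSA verifies candidates together in one forward pass using tree
    # attention; this toy loop checks them one at a time instead.
    lengths = [accepted_length(prefix, c, backbone) for c in candidates]
    best = max(range(len(candidates)), key=lengths.__getitem__)
    return candidates[best][:lengths[best]]


# Hypothetical toy backbone and top-2 predictions for the next three positions
# (position 1 from the backbone's own LM head, positions 2-3 from MEDUSA heads).
toy_backbone: Backbone = lambda seq: (seq[-1] * 2) % 7
top2 = [[2, 5], [4, 3], [6, 1]]
print(len(build_candidates(top2)), pick_best([1], top2, toy_backbone))
```

Out of eight candidates, only `(2, 4, 1)` matches the backbone at every position, so a single step advances by three tokens.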

The first procedure, MEDUSA-1, fine-tunes the MEDUSA heads directly on top of a frozen backbone model, which enables lossless inference acceleration. MEDUSA-1 suits cases where MEDUSA is added to an existing model or where resources are limited: it uses less memory, and its efficiency can be improved further with quantization techniques.
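
A MEDUSA-1 style objective can be sketched as a cross-entropy loss for each head against its true future token, with later heads weighted less. The geometric decay of 0.8 and the function name are assumptions for illustration. In a real setup this would be computed in a deep-learning framework, and because the backbone is frozen, only the head parameters would be passed to the optimizer.

```python
import math
from typing import Sequence


def medusa1_loss(head_probs: Sequence[Sequence[float]],
                 future_tokens: Sequence[int], decay: float = 0.8) -> float:
    """Decay-weighted cross-entropy summed over MEDUSA heads (assumed form).

    head_probs[k-1]   : distribution predicted by head k at position t
    future_tokens[k-1]: true token at position t + k + 1 (head k's target)
    """
    loss = 0.0
    for k, (probs, target) in enumerate(zip(head_probs, future_tokens), start=1):
        # Heads that look further ahead are harder to train, so their
        # contribution is down-weighted geometrically (decay ** k).
        loss += (decay ** k) * -math.log(probs[target])
    return loss


# Toy example: two heads over a two-token vocabulary.
heads_out = [[0.5, 0.5], [0.25, 0.75]]
print(medusa1_loss(heads_out, future_tokens=[0, 0]))
```

For this input the loss is 0.8 ln 2 + 0.64 ln 4, i.e. 2.08 ln 2.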

In contrast, MEDUSA-2 fine-tunes the MEDUSA heads together with the backbone LLM. This requires a special training recipe, but it delivers greater speedups and higher prediction accuracy for the MEDUSA heads. MEDUSA-2 is suitable when resources are abundant, since it trains the heads and the backbone model jointly without sacrificing output quality or the backbone's next-token prediction ability.
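
One way to picture joint training is a combined objective: the backbone's own next-token loss, which protects its original prediction ability, plus a weighted sum of the head losses. The sketch below assumes that form, and its linear warmup of the head weight (so freshly initialized heads do not disturb the backbone early in training) is a hypothetical schedule, not the paper's exact recipe.

```python
import math
from typing import Sequence


def cross_entropy(probs: Sequence[float], target: int) -> float:
    return -math.log(probs[target])


def medusa2_loss(backbone_probs: Sequence[float], next_token: int,
                 head_probs: Sequence[Sequence[float]], future_tokens: Sequence[int],
                 head_weight: float, decay: float = 0.8) -> float:
    """Assumed joint objective: backbone LM loss + head_weight * head losses."""
    # Standard next-token loss on the backbone keeps its original ability.
    lm_loss = cross_entropy(backbone_probs, next_token)
    # Same decay-weighted head loss as in the MEDUSA-1 setting.
    heads_loss = sum(decay ** k * cross_entropy(p, y)
                     for k, (p, y) in enumerate(zip(head_probs, future_tokens), start=1))
    return lm_loss + head_weight * heads_loss


def head_weight_schedule(step: int, warmup_steps: int, max_weight: float) -> float:
    """Hypothetical linear warmup of the head-loss weight."""
    return max_weight * min(1.0, step / warmup_steps)


w = head_weight_schedule(step=50, warmup_steps=100, max_weight=0.2)
print(w, medusa2_loss([0.5, 0.5], 0, [[0.5, 0.5]], [1], head_weight=w))
```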

The authors also proposed several extensions to MEDUSA, including a typical acceptance scheme that raises the acceptance rate without compromising generation quality, and a self-distillation method for cases where no training data is available. MEDUSA was evaluated on models of different sizes trained with different procedures. The results show that MEDUSA-1 accelerates inference by more than 2x without compromising generation quality, and that MEDUSA-2 raises the speedup to 2.3-3.6x.
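
A typical acceptance rule can be sketched as an entropy-dependent threshold: a candidate token passes if the backbone's probability for it exceeds min(ε, δ · exp(−H(p))), where H(p) is the entropy of the backbone's distribution at that position. This follows our reading of the MEDUSA paper; the default values `epsilon=0.09` and `delta=0.3` below are illustrative assumptions.

```python
import math
from typing import List, Sequence


def entropy(probs: Sequence[float]) -> float:
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def typical_accept(probs: Sequence[float], token: int,
                   epsilon: float, delta: float) -> bool:
    """Accept `token` if the backbone gives it enough probability.

    A flat (high-entropy) distribution lowers the threshold, so more varied
    candidates pass; a peaked one raises it toward epsilon.
    """
    threshold = min(epsilon, delta * math.exp(-entropy(probs)))
    return probs[token] > threshold


def accept_prefix(step_probs: Sequence[Sequence[float]], candidate: Sequence[int],
                  epsilon: float = 0.09, delta: float = 0.3) -> List[int]:
    """Longest prefix of `candidate` passing the typical-acceptance test.

    step_probs[i] is the backbone's distribution at candidate position i.
    """
    accepted: List[int] = []
    for probs, token in zip(step_probs, candidate):
        if not typical_accept(probs, token, epsilon, delta):
            break
        accepted.append(token)
    return accepted


step_probs = [[0.7, 0.2, 0.1], [0.4, 0.35, 0.25], [0.96, 0.02, 0.02]]
print(accept_prefix(step_probs, [1, 2, 1]))
```

Unlike exact greedy matching, this accepts the non-argmax tokens at the first two positions because they are still plausible, and rejects the third because the backbone is confident about a different token. Over a uniform 20-token distribution the threshold drops to 0.015, so a token with probability 0.05 passes even though it is below ε.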

The research, including the full paper and related code, is available on GitHub. All credit goes to the project's researchers.