The field of large language models (LLMs) is advancing rapidly, driven by the need to process long text inputs and deliver accurate, efficient responses. Both open-access LLMs and proprietary models such as GPT-4-Turbo must handle volumes of information that often exceed what fits in a single prompt. This capability is key for tasks such as document summarisation, conversational question answering, and information retrieval.

However, there is still a significant performance gap between open-access LLMs and proprietary models. Open-access models such as Llama-3-70B-Instruct and Qwen2-72B-Instruct are improving, yet they still fall short in long-context processing and retrieval. These shortcomings are most evident in real-world applications, where handling long-context inputs and retrieving information efficiently are crucial.

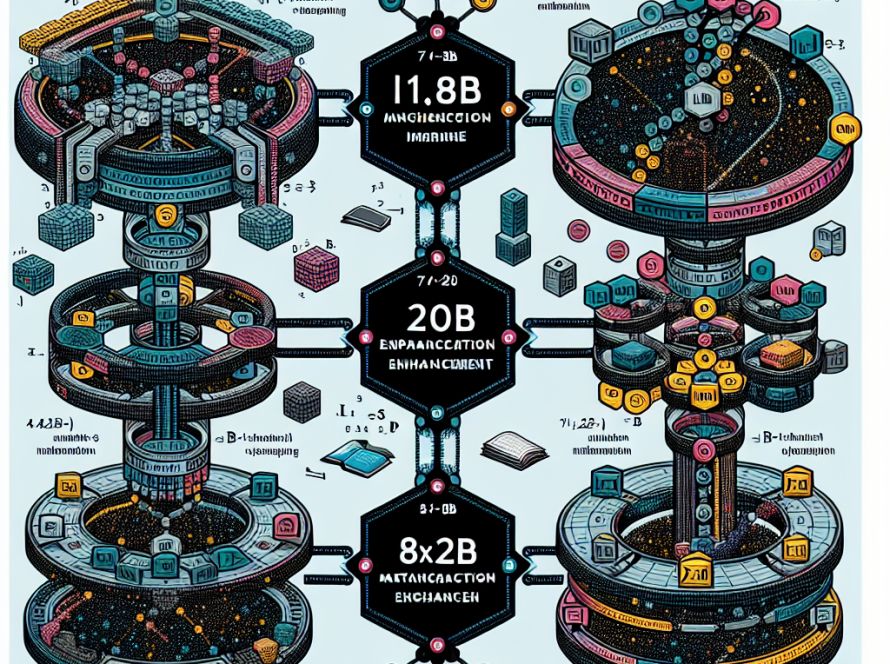

To tackle these challenges, researchers from NVIDIA have introduced ChatQA 2, a model based on Llama3 and designed to improve instruction following, retrieval-augmented generation (RAG) performance, and long-context understanding. The model achieves this by extending the context window to 128K tokens and applying a three-stage instruction tuning process. The context window is extended through continual pretraining on a mix of datasets totalling 10 billion tokens, with a sequence length of 128K.

The creation of ChatQA 2 starts by expanding Llama3-70B’s context window from 8K to 128K tokens through continual pretraining on a mix of datasets, followed by a three-stage instruction tuning process. The stages train, in order, on high-quality instruction-following datasets, on conversational QA data with provided context, and on long-context sequences of up to 128K tokens.
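The figures in this recipe can be sanity-checked with a little arithmetic. The sketch below is illustrative only: the constant and function names are hypothetical, it assumes "K" means 1,024 tokens, and it is not taken from the ChatQA 2 training code. It lists the stages as described above and computes the context extension factor and roughly how many full 128K-token sequences fit in 10 billion tokens.

```python
# Illustrative sketch only; all names here are hypothetical.
K = 1024  # assumption: "128K" means 128 * 1,024 = 131,072 tokens

BASE_CONTEXT = 8 * K              # original context window
EXTENDED_CONTEXT = 128 * K        # extended context window
PRETRAIN_TOKENS = 10_000_000_000  # continual-pretraining token budget

# Stages as described in the article, in order.
RECIPE = [
    ("continual pretraining", "mix of datasets at 128K sequence length"),
    ("instruction tuning, stage 1", "high-quality instruction-following datasets"),
    ("instruction tuning, stage 2", "conversational QA data with provided context"),
    ("instruction tuning, stage 3", "long-context sequences of up to 128K tokens"),
]


def context_extension_factor(old_len: int, new_len: int) -> float:
    """How many times longer the new context window is than the old one."""
    return new_len / old_len


def full_sequences(total_tokens: int, seq_len: int) -> int:
    """Number of complete sequences of seq_len tokens in a token budget."""
    return total_tokens // seq_len


if __name__ == "__main__":
    for name, data in RECIPE:
        print(f"{name}: {data}")
    print("extension factor:", context_extension_factor(BASE_CONTEXT, EXTENDED_CONTEXT))
    print("full 128K sequences:", full_sequences(PRETRAIN_TOKENS, EXTENDED_CONTEXT))
```

Under these assumptions the window grows 16-fold, and the 10 billion tokens correspond to roughly 76,000 sequences of full length.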

As a result, ChatQA 2 achieves accuracy comparable to GPT-4-Turbo on many long-context understanding tasks and surpasses it on RAG benchmarks. It also performs well on medium-long context benchmarks within 32K tokens and on short-context tasks within 4K tokens. In addition, it addresses significant RAG pipeline problems such as context fragmentation and low recall rates, which improves the model’s efficiency on query-based tasks.
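To make the RAG terms concrete, here is a toy sketch of top-k chunk retrieval. It is not the retriever used in ChatQA 2: the word-overlap scoring, the function names, and the sample chunks are all assumptions chosen for illustration. It shows how a document is split into chunks (the source of fragmentation) and how recall depends on the number of chunks retrieved.

```python
# Toy illustration only; not the ChatQA 2 retrieval pipeline.

def chunk_words(text: str, chunk_size: int) -> list[str]:
    """Split text into consecutive chunks of at most chunk_size words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def overlap_score(query: str, chunk: str) -> int:
    """Count distinct lowercase words shared by the query and the chunk."""
    return len(set(query.lower().split()) & set(chunk.lower().split()))


def retrieve_top_k(query: str, chunks: list[str], k: int) -> list[str]:
    """Return the k highest-scoring chunks; ties keep their original order."""
    ranked = sorted(chunks, key=lambda c: overlap_score(query, c), reverse=True)
    return ranked[:k]


def recall(retrieved: list[str], relevant: list[str]) -> float:
    """Fraction of the relevant chunks that appear among the retrieved ones."""
    if not relevant:
        return 0.0
    return sum(1 for r in relevant if r in retrieved) / len(relevant)


if __name__ == "__main__":
    chunks = [
        "the contract starts in march",
        "payment is due in thirty days",
        "late payment incurs a fee",
        "the office is in berlin",
    ]
    query = "when is payment due and what is the late fee"
    relevant = [chunks[1], chunks[2]]  # the answer is spread over two chunks
    for k in (1, 2):
        print(k, recall(retrieve_top_k(query, chunks, k), relevant))
```

In this toy setup the answer is fragmented across two chunks, so retrieving only the top chunk recovers half of the relevant text, while retrieving two recovers all of it. A longer context window leaves room to pass more or larger chunks to the model.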

In essence, ChatQA 2 is a significant advance in large language models, with improved capabilities for processing and retrieving information from very long text inputs. Its development and evaluation mark an important step forward for the field, and it balances accuracy and efficiency across a variety of tasks by combining long-context and retrieval-augmented generation techniques.