Artificial intelligence (AI) and increasingly complex neural networks are growing rapidly, which calls for efficient hardware that can run them within power and resource constraints. One potential solution is in-memory computing (IMC), which performs computation inside the memory itself and depends on optimization across devices, circuits, architectures, and algorithms. The explosion of data from the Internet of Things (IoT) has further driven this need for advanced AI processing capabilities.

Researchers at organizations including King Abdullah University of Science and Technology, Rain Neuromorphics, and IBM Research are exploring hardware-aware neural architecture search (HW-NAS) to design efficient neural networks for IMC hardware. HW-NAS takes the specific features and constraints of IMC hardware into account when deploying neural networks, so that hardware and software performance are improved jointly. The approach still has bottlenecks, notably the lack of a unified framework and of benchmarks that cover different neural network models and IMC architectures.

Unlike conventional neural architecture search (NAS), HW-NAS integrates hardware parameters into the search and automatically optimizes neural networks under hardware constraints such as energy, latency, and memory size. Frameworks developed in recent years can optimize neural network and IMC hardware parameters simultaneously. However, traditional NAS surveys often overlook the peculiarities of IMC hardware, hence the need for a review focused specifically on HW-NAS for IMC.
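To make the idea of searching "within hardware constraints" concrete, the sketch below scores a candidate network by its accuracy and subtracts a penalty when its estimated energy, latency, or memory exceeds a budget. This is only one simple way to fold constraints into a search objective; the names `Metrics`, `Budget`, `is_feasible`, and `score`, the units, and the penalty form are illustrative assumptions, not taken from any particular HW-NAS framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metrics:
    """Estimated figures for one candidate (hypothetical units)."""
    accuracy: float    # validation accuracy in [0, 1]
    energy_mj: float   # energy per inference, millijoules
    latency_ms: float  # latency per inference, milliseconds
    memory_kb: float   # weight storage, kilobytes


@dataclass(frozen=True)
class Budget:
    """Hardware limits the candidate should respect."""
    energy_mj: float
    latency_ms: float
    memory_kb: float


def is_feasible(m: Metrics, b: Budget) -> bool:
    """True if every hardware metric is within its budget."""
    return (m.energy_mj <= b.energy_mj
            and m.latency_ms <= b.latency_ms
            and m.memory_kb <= b.memory_kb)


def score(m: Metrics, b: Budget, penalty: float = 1.0) -> float:
    """Accuracy minus a penalty proportional to the relative budget overshoot.

    Assumed soft-constraint form: a candidate within budget is scored by
    accuracy alone; each violated budget lowers the score.
    """
    pairs = (
        (m.energy_mj, b.energy_mj),
        (m.latency_ms, b.latency_ms),
        (m.memory_kb, b.memory_kb),
    )
    overshoot = sum(max(0.0, used / limit - 1.0) for used, limit in pairs)
    return m.accuracy - penalty * overshoot
```

A search loop would call `score` on each candidate and keep the highest-scoring one. A hard-constraint variant would instead discard any candidate for which `is_feasible` returns `False`.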

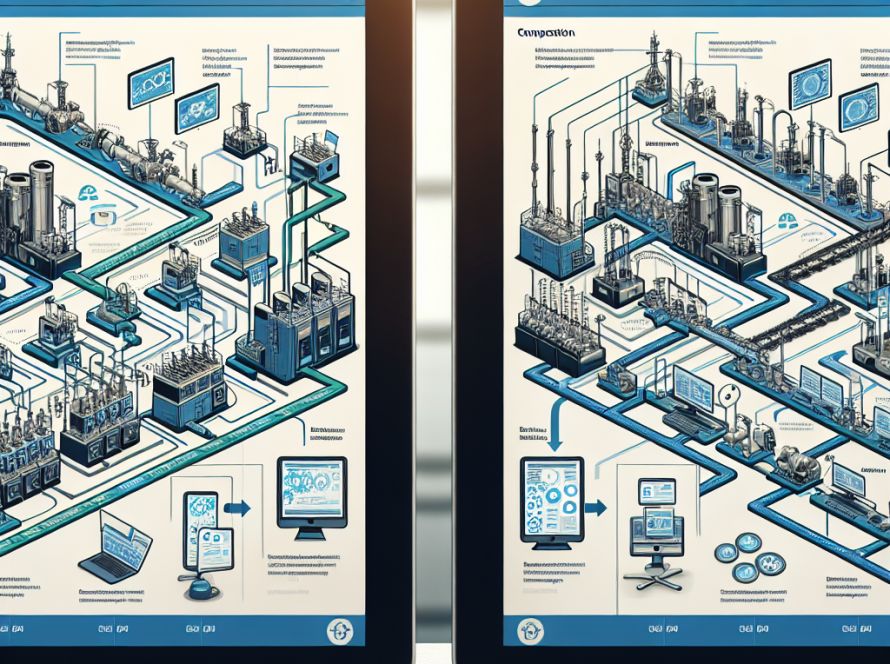

Traditional von Neumann architectures incur high energy costs when transferring data between memory and computing units. IMC addresses this challenge by processing data within the memory, which reduces data movement and thereby lowers latency and improves energy efficiency. Memory technologies such as SRAM, RRAM, and PCM are arranged in crossbar arrays to execute operations efficiently in IMC systems. A crucial part of this process is the optimization of design parameters across devices, circuits, and architectures, which HW-NAS explores to balance performance, computational demands, and scalability.
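The operation a crossbar array typically accelerates is the matrix-vector multiplication: weights are stored as cell conductances, inputs are applied as row voltages, and each column current is the sum of voltage times conductance along that column (Ohm's law combined with Kirchhoff's current law). The sketch below models this ideal behavior in plain Python. The function name `crossbar_mvm` and the unitless example values are assumptions for illustration, and real devices add non-idealities such as noise and limited precision that are not modeled here.

```python
def crossbar_mvm(voltages, conductances):
    """Ideal crossbar read-out: column current j = sum_i V[i] * G[i][j].

    voltages:     list of row input voltages, length = number of rows
    conductances: 2D list [row][column] of cell conductances
    """
    rows = len(conductances)
    if rows == 0 or len(voltages) != rows:
        raise ValueError("voltages must have one entry per crossbar row")
    cols = len(conductances[0])
    if any(len(row) != cols for row in conductances):
        raise ValueError("all crossbar rows must have the same number of columns")
    # Each column wire accumulates the currents of all cells attached to it.
    return [
        sum(voltages[i] * conductances[i][j] for i in range(rows))
        for j in range(cols)
    ]


# Two inputs driving a 2x2 array of weights (arbitrary units).
currents = crossbar_mvm([1.0, 0.5], [[1.0, 2.0], [4.0, 6.0]])
print(currents)  # [3.0, 5.0]
```

Because all columns are read out in parallel in the physical array, the whole product is obtained without moving the weights out of memory, which is where the savings in data movement come from.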

HW-NAS for IMC combines four techniques from deep learning: model compression, neural network model search, hyperparameter search, and hardware optimization. Together, these techniques explore the design space to find optimal combinations of neural network and hardware configurations. The search space covers both neural network operations and hardware design parameters, with the aim of achieving efficient performance within given hardware constraints.
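A joint search space of this kind can be sketched as a dictionary that mixes neural network choices with hardware choices, explored here by plain random search. Everything in the example is hypothetical: the parameter names and value ranges in `SEARCH_SPACE` are invented for illustration, and `toy_evaluate` is a stand-in formula, not a real accuracy or energy model. Practical HW-NAS flows would replace it with training or a predictor plus a hardware simulator, and often use more sophisticated search strategies than random sampling.

```python
import random

# Hypothetical joint search space: network and hardware parameters together.
SEARCH_SPACE = {
    "num_layers": [4, 8, 12],         # network depth
    "channels": [16, 32, 64],         # network width
    "kernel_size": [3, 5],            # convolution kernel size
    "weight_bits": [2, 4, 8],         # quantization (model compression)
    "crossbar_size": [64, 128, 256],  # rows/columns per crossbar tile
    "adc_bits": [4, 6, 8],            # converter resolution
}


def sample_config(rng):
    """Draw one value for every parameter in the joint space."""
    return {name: rng.choice(choices) for name, choices in SEARCH_SPACE.items()}


def toy_evaluate(cfg):
    """Placeholder proxies (NOT a real model): returns (accuracy, energy)."""
    capacity = cfg["num_layers"] * cfg["channels"]
    precision = cfg["weight_bits"] + cfg["adc_bits"]
    accuracy = 1.0 - 1.0 / (1.0 + 0.01 * capacity * precision)
    energy = capacity * cfg["kernel_size"] ** 2 * precision / cfg["crossbar_size"]
    return accuracy, energy


def random_search(n_trials, energy_budget, seed=0):
    """Return the best (config, accuracy, energy) within the energy budget.

    Returns None if no sampled configuration satisfies the budget.
    """
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        cfg = sample_config(rng)
        accuracy, energy = toy_evaluate(cfg)
        if energy > energy_budget:
            continue  # violates the hardware constraint, skip it
        if best is None or accuracy > best[1]:
            best = (cfg, accuracy, energy)
    return best
```

Calling `random_search(200, energy_budget=100.0)` returns a single configuration that fixes network depth, width, and quantization alongside crossbar size and converter resolution, which is the sense in which the network and the hardware are searched together.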

Despite the progress made with HW-NAS techniques for IMC, several challenges persist. One is the lack of a unified framework that combines neural network design, hardware parameters, pruning, and quantization. Benchmarking is also difficult because HW-NAS methods are compared inconsistently. Existing frameworks often focus on convolutional neural networks and sideline other models such as transformers or graph networks, and they frequently need adaptation for non-standard IMC architectures. Future research should aim at frameworks that optimize both the software and hardware levels, support a diverse range of neural networks, and improve data and mapping efficiency. Integrating HW-NAS with other optimization techniques is crucial for the effective design of IMC hardware.