Integrating machine learning frameworks with diverse hardware architectures has proven complicated and time-consuming, primarily because standardized interfaces are lacking. The result is frequent compatibility problems and slower adoption of new hardware technologies. Developers usually have to write specific code for each piece of hardware, and communication costs and scalability issues make it hard to use hardware resources efficiently for machine learning tasks.

Current methods of combining machine learning frameworks with hardware generally involve writing device-specific code or relying on middleware such as gRPC for communication between the framework and the hardware. These approaches can be burdensome and add overhead, which limits performance and scalability. The Google team's proposed solution is PJRT, a platform-independent runtime and compiler interface delivered as a plugin. It acts as an intermediary layer between machine learning frameworks such as TensorFlow, JAX, and PyTorch and underlying hardware such as TPUs, GPUs, and CPUs. By offering a standardized interface, PJRT simplifies integration, encourages hardware agnosticism, and enables quicker development cycles.
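To make the integration burden concrete, here is a minimal counting sketch in Python. It uses the three frameworks and three hardware targets named above. The counting model is an illustrative assumption, not a figure from the PJRT project: one bespoke integration per framework/hardware pair without a shared interface, versus one adapter per framework plus one plugin per hardware target with it.

```python
from itertools import product

# Names come from the paragraph above; the counting model is an assumption.
frameworks = ["TensorFlow", "JAX", "PyTorch"]
hardware = ["TPU", "GPU", "CPU"]

# Without a shared interface: one bespoke integration per (framework, hardware) pair.
pairwise = list(product(frameworks, hardware))

# With a shared interface: each framework targets the interface once,
# and each hardware target implements the interface once.
shared = [(f, "interface") for f in frameworks] + [("interface", h) for h in hardware]

print(len(pairwise))  # 9
print(len(shared))    # 6
```

Under this model the gap widens as either list grows, since the first count is a product and the second is a sum.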

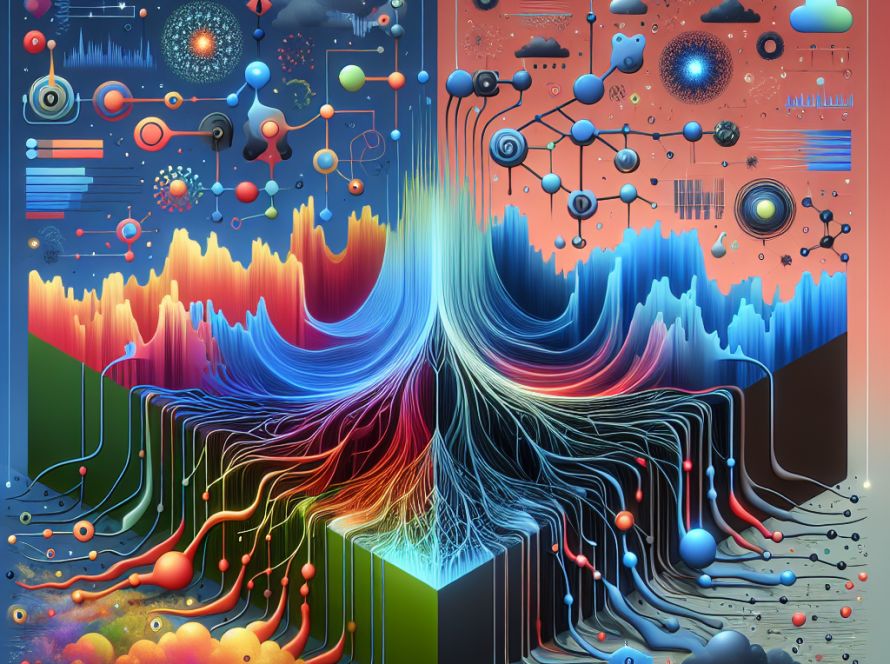

PJRT's architecture centers on an abstraction layer that bridges machine learning frameworks and hardware. This layer translates the framework's operations into a format the underlying hardware can understand, so the two sides can communicate and execute work without framework-specific glue code. PJRT was designed to be toolchain-independent, which keeps it flexible and adaptable to various development environments. It also provides direct device access by removing the need for an intermediate server process, leading to faster and more efficient data transfer.

Because PJRT is open source, it invites contributions from the wider community and broader adoption, which encourages innovation in how machine learning hardware and software are integrated. PJRT is reported to considerably improve the performance of machine learning workloads, especially on TPUs. By eliminating overhead and supporting larger models, it can improve training times, scalability, and overall efficiency. PJRT is currently used by an expanding array of hardware, including Apple silicon, Google Cloud TPU, NVIDIA GPU, and Intel Max GPU.

To conclude, PJRT addresses the challenges of integrating diverse hardware architectures with machine learning frameworks by offering a standardized, toolchain-independent interface. This speeds up integration and enables hardware agnosticism, paving the way for wider hardware compatibility and faster development cycles. With its efficient architecture and direct device access, PJRT also improves performance, particularly for machine learning workloads running on TPUs.