Text, audio, and code are sequences whose meaning depends on the order of their elements. Large language models (LLMs) built on the Transformer architecture rely on attention, which has no inherent notion of order and effectively treats its input as a set. Position encoding (PE) addresses this by attaching position information to each token, typically a vector for each position (or, in relative methods, for each offset between positions), so the model can tell where a token sits in the sequence. PE methods fall broadly into absolute and relative types, and both are core components of modern LLMs. However, they measure position in tokens, and because the number of tokens in a word or sentence varies with the tokenizer and the text, it is hard to address positions precisely at a higher level, such as attending to the i-th word or sentence.
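To make the "sequences as sets" point concrete, here is a minimal sketch of single-head self-attention with no position encoding. It assumes identity query/key/value projections and no causal mask (simplifications for illustration; real models use learned projection matrices), and the function names are hypothetical. Shuffling the input tokens only shuffles the output rows in the same way, so the layer itself cannot recover the original order.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(xs):
    """Single-head self-attention with identity Q/K/V projections and no position encoding."""
    out = []
    for q in xs:
        weights = softmax([dot(q, k) for k in xs])
        out.append([sum(w * v[d] for w, v in zip(weights, xs)) for d in range(len(q))])
    return out

tokens = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
perm = [2, 0, 1]
shuffled = [tokens[i] for i in perm]

a = self_attention(tokens)
b = self_attention(shuffled)
# Each output row for the shuffled input matches the original row for the same token.
print(all(
    all(abs(x - y) < 1e-12 for x, y in zip(b[new], a[old]))
    for new, old in enumerate(perm)
))  # True
```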

Early attention mechanisms did not need PE because they were paired with Recurrent Neural Networks (RNNs), which process tokens in order and therefore carry position implicitly. Memory Networks later combined attention with PE, using learnable vectors for relative positions. PE became widespread with the Transformer, and both absolute and relative variants have since been explored, leading to refinements such as efficient bias terms. Contextual Position Encoding (CoPE) continues this line of work by making the position measurement itself depend on context.

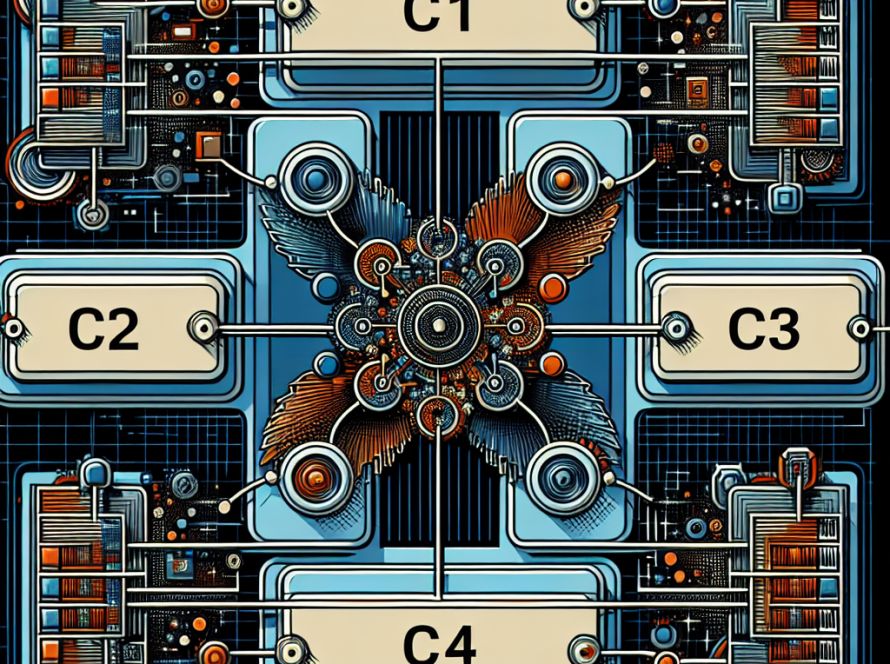

Meta researchers introduced CoPE, a mechanism that determines token positions from context vectors rather than by simply counting tokens. Because CoPE's positions can take fractional values, the position embeddings must be interpolated between neighboring integer positions. The resulting embeddings are added into the attention computation, so positional information directly shapes which tokens each query attends to. The authors report strong results on tasks such as counting and selective copying, where CoPE outperforms token-based PE methods, especially on out-of-domain inputs.
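The interpolation step can be sketched as follows, assuming the formulation in the CoPE paper as we understand it: a learned table holds one embedding e[p] per integer position, a fractional position p is mapped to the linear blend (p - ⌊p⌋)·e[⌈p⌉] + (1 - p + ⌊p⌋)·e[⌊p⌋], and the result is added to the key before the dot product with the query. The helper names, the toy embedding table, and clamping p to the last table index are assumptions made for this illustration, not the authors' code.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def interpolate_embedding(p, pos_emb):
    """Blend the two integer-position embeddings that bracket a fractional position p."""
    p = min(p, len(pos_emb) - 1)  # assumption: clamp to the largest position in the table
    lo, hi = math.floor(p), math.ceil(p)
    frac = p - lo
    return [(1 - frac) * e_lo + frac * e_hi
            for e_lo, e_hi in zip(pos_emb[lo], pos_emb[hi])]

def cope_logit(q, k, p, pos_emb):
    """Pre-softmax attention logit q . (k + e[p])."""
    e = interpolate_embedding(p, pos_emb)
    return dot(q, [kd + ed for kd, ed in zip(k, e)])

pos_emb = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [3.0, 0.0]]  # toy table, hypothetical values
print(interpolate_embedding(1.25, pos_emb))  # [1.25, 0.0]
print(cope_logit([1.0, 2.0], [0.5, 0.5], 1.25, pos_emb))  # 2.75
```

Because the interpolation is linear, the same logit can be obtained by first computing the scalars q·e[⌊p⌋] and q·e[⌈p⌉] and interpolating those, which avoids building a separate embedding vector for every query-key pair.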

CoPE measures position in a context-dependent way. For every query-key pair it computes a gate value, and the position of a key relative to a query is the sum of the gate values over the tokens from that key up to the query. Because the gates are computed from the query and key vectors, the whole position measurement is differentiable and can be learned through backpropagation. This extends relative PE beyond token counts: depending on which tokens the gates open for, position can count words, sentences, or other units. Since the gate values are not restricted to 0 or 1, the resulting positions can be fractional. Standard position encodings, by contrast, can fail on tasks that require precise counting of such units, which motivates a context-aware approach like CoPE.
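The gate-and-sum computation described above can be sketched in plain Python. Following the paper's formulation as we understand it, the gate is g[i][j] = sigmoid(q_i · k_j) for keys j ≤ i (causal), and the position is p[i][j] = g[i][j] + g[i][j+1] + ... + g[i][i]. The function names are hypothetical, and the demo swaps learned gates for hand-set 0/1 gates that open only on "." tokens, an illustrative assumption chosen to show how the same sum can count sentences instead of tokens.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def positions_from_gates(gate_row):
    """p[j] = sum of gate_row[k] for k = j..i, where i (the last index) is the query."""
    positions = [0.0] * len(gate_row)
    running = 0.0
    for j in range(len(gate_row) - 1, -1, -1):  # walk backwards from the query token
        running += gate_row[j]
        positions[j] = running
    return positions

def cope_positions(queries, keys):
    """For each query i: gates sigmoid(q_i . k_j) over j <= i, then cumulative positions."""
    rows = []
    for i, q in enumerate(queries):
        gate_row = [sigmoid(sum(a * b for a, b in zip(q, keys[j]))) for j in range(i + 1)]
        rows.append(positions_from_gates(gate_row))
    return rows

tokens = ["The", "cat", "sat", ".", "It", "slept", ".", "Then"]
# Hypothetical hand-set gates: open (1.0) only on sentence-ending "." tokens.
gates = [1.0 if t == "." else 0.0 for t in tokens]
print(positions_from_gates(gates))
# [2.0, 2.0, 2.0, 2.0, 1.0, 1.0, 1.0, 0.0]
```

Seen from the query "Then", every token of the first sentence sits at position 2 and every token of the second at position 1, so the positions count sentences rather than tokens. With learned sigmoid gates the values fall in between, which is why fractional positions arise and the interpolation step is needed.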

Among the PE methods compared, absolute PE performed worst. CoPE outperformed relative PE, and combining CoPE with relative PE improved results further. CoPE's advantage also held in general language modeling and on code: on the code dataset, it showed an improvement of 17% over absolute PE and 5% over RoPE.

The paper introduces CoPE, a robust position encoding method that measures position contextually instead of by token counts. This gives the model more flexible positional addressing and yields improvements across tasks in both the text and code domains. Future work could apply CoPE to domains such as video and audio, where token-based positions may be less suitable, and train larger models with CoPE to evaluate their downstream task performance.