Large Language Models (LLMs) are central to natural language processing, but they are hard to deploy because inference demands substantial computation and memory. Current research aims to make LLMs more efficient through quantization, pruning, distillation, and improved decoding. Sparsity is one of the key techniques: it reduces computation by skipping zero elements and cuts I/O transfer between memory and compute units.

Both forms of sparsity have drawbacks. Weight sparsity is hard to parallelize on GPUs and tends to cost accuracy. Activation sparsity has yet to deliver its full efficiency gains, and its scaling laws relative to dense models need further study.

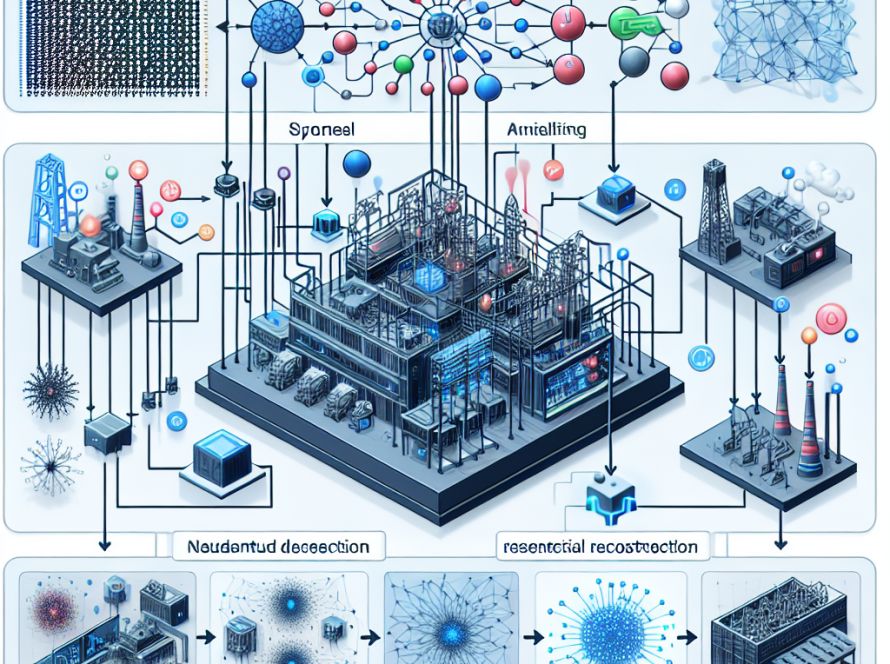

Researchers from Microsoft and the University of Chinese Academy of Sciences recently introduced Q-Sparse, an approach for training sparsely activated LLMs. Q-Sparse achieves full sparsity of activations by applying top-K sparsification to the activations and using a straight-through estimator (STE) during training, which significantly improves inference efficiency.
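The two ingredients named above can be illustrated with a minimal, pure-Python sketch. It is not the authors' implementation: the function names (`top_k_mask`, `sparsify_forward`, `backward_ste`, `backward_masked`) are hypothetical, the choice to rank entries by absolute value is an assumption, and a real model would use tensors and automatic differentiation. The forward pass keeps only the K largest-magnitude activations. The backward pass with a straight-through estimator treats the sparsification as the identity, so every input receives a gradient. The masked variant is shown only for contrast.

```python
def top_k_mask(x, k):
    """Return a 0/1 mask that keeps the k largest-magnitude entries of x."""
    # Sort indices by absolute value, largest first (ties keep earlier index).
    keep = sorted(range(len(x)), key=lambda i: -abs(x[i]))[:k]
    mask = [0.0] * len(x)
    for i in keep:
        mask[i] = 1.0
    return mask


def sparsify_forward(x, k):
    """Forward pass: zero out everything except the top-k activations."""
    mask = top_k_mask(x, k)
    return [xi * mi for xi, mi in zip(x, mask)], mask


def backward_ste(grad_out):
    """Straight-through estimator: pass the gradient through unchanged."""
    return list(grad_out)


def backward_masked(grad_out, mask):
    """Contrast case: without STE, dropped entries get zero gradient."""
    return [g * m for g, m in zip(grad_out, mask)]


x = [0.5, -2.0, 0.1, 1.5]
y, mask = sparsify_forward(x, k=2)
grad_out = [1.0, 1.0, 1.0, 1.0]
print(y)                                # sparsified activations
print(backward_ste(grad_out))           # gradient reaches all four inputs
print(backward_masked(grad_out, mask))  # gradient reaches only kept inputs
```

With the masked backward pass, the entries that were dropped never receive a training signal. The straight-through estimator avoids this by letting the gradient bypass the non-differentiable top-K selection.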

Q-Sparse modifies the Transformer architecture to allow full sparsity in activations. Applying a top-K function to the activations that feed each matrix multiplication cuts computational cost and memory traffic, while the straight-through estimator lets gradients pass through the sparsification during training. The method supports both full-precision and quantized models, including 1-bit models such as BitNet b1.58. In the feed-forward layers, Q-Sparse uses squared ReLU to further increase activation sparsity.
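To see why sparse activations reduce both computation and memory traffic, consider the following pure-Python sketch. It is an illustration under stated assumptions, not the Q-Sparse kernel: `squared_relu` and `sparse_matvec` are hypothetical names, and the weight matrix is a plain list of rows. Squared ReLU maps every non-positive input to exactly zero, and the matrix-vector product then skips each weight row whose matching activation is zero, so that row is neither read nor multiplied.

```python
def squared_relu(x):
    """Squared ReLU: max(0, v)^2, which is exactly zero for v <= 0."""
    return [max(0.0, v) ** 2 for v in x]


def sparse_matvec(x, W):
    """Compute y = x @ W, reading only the rows of W where x is nonzero.

    Returns the result and the number of weight rows actually read.
    """
    n_out = len(W[0])
    y = [0.0] * n_out
    rows_read = 0
    for i, xi in enumerate(x):
        if xi == 0.0:
            continue  # zero activation: skip this weight row entirely
        rows_read += 1
        for j in range(n_out):
            y[j] += xi * W[i][j]
    return y, rows_read


W = [[1.0, 2.0],
     [3.0, 4.0],
     [5.0, 6.0]]
act = squared_relu([-1.0, 0.0, 2.0])  # only the last entry is nonzero
y, rows_read = sparse_matvec(act, W)
print(act, y, rows_read)
```

In this toy case only one of the three weight rows is touched. On real hardware the savings depend on how well the kernel can exploit the sparsity pattern.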

LLM performance is known to scale with model size and training data following a power law, and the researchers studied whether the same holds for sparsely activated LLMs. They found that, at a fixed sparsity ratio, the performance of sparsely activated models follows a scaling trend similar to that of dense models. Furthermore, the performance gap between sparse and dense models narrows as model size increases.
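One way to see why the gap can shrink with scale is a generic power-law form for the loss. The expression below is an illustrative assumption, not a formula quoted from the researchers: $N$ is the model size, $E$ an irreducible loss term, $\alpha > 0$ a shared exponent, and $A_{\text{dense}}$, $A_{\text{sparse}}$ are hypothetical coefficients, with $A_{\text{sparse}}$ depending on the fixed sparsity ratio.

```latex
L_{\text{dense}}(N) = E + \frac{A_{\text{dense}}}{N^{\alpha}},
\qquad
L_{\text{sparse}}(N) = E + \frac{A_{\text{sparse}}}{N^{\alpha}}
\quad\Longrightarrow\quad
L_{\text{sparse}}(N) - L_{\text{dense}}(N)
  = \frac{A_{\text{sparse}} - A_{\text{dense}}}{N^{\alpha}}
  \;\longrightarrow\; 0
  \quad \text{as } N \to \infty .
```

Under these assumptions both curves share the same shape, and the difference between them decays at the rate $N^{-\alpha}$, which is consistent with a gap that narrows as models grow.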

Q-Sparse LLMs were evaluated in several training settings: training from scratch, continue-training, and fine-tuning. In all of them, Q-Sparse matched or exceeded the performance of dense baselines, which supports its effectiveness and efficiency.

In conclusion, combining BitNet b1.58 with Q-Sparse markedly improves efficiency, particularly at inference. The researchers plan to scale up training with more models and tokens and to optimize KV cache management by integrating YOCO. Because Q-Sparse matches the performance of dense baselines while remaining compatible with both full-precision and 1-bit models, it offers a practical strategy for making LLMs more efficient and sustainable.