Large language models (LLMs) have significantly advanced code generation, but they produce code sequentially, token by token, without a feedback loop that lets them correct earlier output based on what it does. This makes it hard for them to fix mistakes or suggest edits. Now, researchers at the University of California, Berkeley, have developed a new approach based on neural diffusion models that operate directly on syntax trees, the hierarchical representations of a program's structure.

Several methods have tried to address these issues. Neural program synthesis combines neural networks with search to generate programs: these methods build programs incrementally, exploring possible combinations of program components, but this can be inefficient. Separately, neural diffusion models have shown strong results for generating high-dimensional data, including images as well as structured, discrete data such as molecules and graphs. Neural models have also been trained to edit code directly using real-world code patches, but these methods require large datasets of code edits and do not inherently guarantee syntactic validity.

The researchers' approach iteratively refines programs while ensuring that they remain syntactically valid. Because every intermediate program is valid, the model can execute it and observe its output at each step, much as a programmer does when debugging. The approach also allows the space of possible programs to be explored efficiently. Inspired by systems like AlphaZero, the researchers combine the neural model with search, and this hybrid approach shows significant potential for search-based program synthesis.
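The edit-execute-search loop described above can be sketched in a few lines. This is a minimal illustration under loud assumptions, not the researchers' implementation: a hypothetical 1D "interval" language stands in for a graphics language, `propose_edits` is a hand-written stand-in for the learned policy, and `value` (negative pixel mismatch) is a stand-in for the learned value network. Every candidate is rendered before it is scored, mirroring how the model observes each program's output, and the function counts renderer calls, the cost measure the results refer to.

```python
from typing import List, Optional, Tuple

WIDTH = 16
# Hypothetical toy language: a program is a union of 1D intervals [start, end).
Program = Tuple[Tuple[int, int], ...]


def render(program: Program) -> List[int]:
    """Execute a program by painting each interval onto a 1D canvas."""
    canvas = [0] * WIDTH
    for start, end in program:
        for x in range(start, end):
            canvas[x] = 1
    return canvas


def propose_edits(program: Program) -> List[Program]:
    """Stand-in for a learned policy: enumerate small edits that keep every
    interval well-formed (0 <= start < end <= WIDTH)."""
    edits = []
    for i, (s, e) in enumerate(program):
        for ds, de in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            ns, ne = s + ds, e + de
            if 0 <= ns < ne <= WIDTH:
                edits.append(program[:i] + ((ns, ne),) + program[i + 1:])
        edits.append(program[:i] + program[i + 1:])  # delete interval i
    for s in range(WIDTH):
        edits.append(program + ((s, s + 1),))  # add a unit interval
    return edits


def value(canvas: List[int], target: List[int]) -> int:
    """Stand-in for a value network: negative pixel mismatch (higher is better)."""
    return -sum(a != b for a, b in zip(canvas, target))


def beam_search(start: Program, target: List[int], beam_width: int = 4,
                max_steps: int = 30) -> Tuple[Optional[Program], int]:
    """Repeatedly edit, execute, and score programs, keeping the best few.
    Returns (solution or None, number of renderer calls)."""
    renderer_calls = 1
    if render(start) == target:
        return start, renderer_calls
    beam, seen = [start], {start}
    for _ in range(max_steps):
        scored = []
        for program in beam:
            for cand in propose_edits(program):
                if cand in seen:
                    continue
                seen.add(cand)
                canvas = render(cand)  # observe the candidate's output
                renderer_calls += 1
                if canvas == target:
                    return cand, renderer_calls
                scored.append((value(canvas, target), cand))
        if not scored:
            break
        # Python's sort is stable, so ties keep their discovery order.
        scored.sort(key=lambda pair: pair[0], reverse=True)
        beam = [cand for _, cand in scored[:beam_width]]
    return None, renderer_calls


if __name__ == "__main__":
    target = render(((3, 5),))
    program, calls = beam_search(((0, 1),), target)
    print(program, calls)
```

Because the policy only emits well-formed intervals, every candidate can be executed safely; in a learned system the policy would rank a few promising edits rather than enumerate them all, which is where savings in renderer calls would come from.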

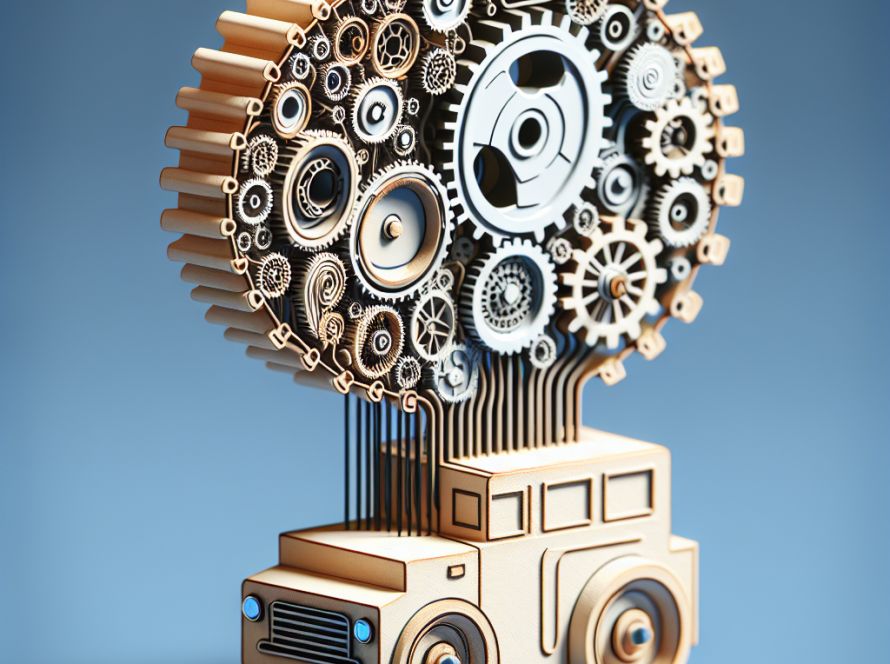

The core idea is to build denoising diffusion models for programs, analogous to image diffusion models. In place of pixel noise, the noising process uses a context-free grammar (CFG) to apply random mutations to programs while keeping them syntactically valid. A neural network is then trained to reverse this process, denoising programs step by step. A value network, trained alongside it, steers the denoising toward programs likely to produce the desired output.
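The grammar-preserving noising step can be sketched as follows. The grammar here is a hypothetical toy for a CSG-style 2D graphics language, an assumption made for illustration since the article does not give the paper's grammar or mutation rules; `GRAMMAR`, `sample`, `mutate`, and `add_noise` are likewise illustrative names. Each step picks a random node and replaces its subtree with a fresh random derivation of the same nonterminal, so every intermediate program remains grammatical.

```python
import random
from dataclasses import dataclass
from typing import Iterator, List, Tuple

# Hypothetical toy grammar (an assumption, not the paper's):
#   Shape -> Circle(Num, Num, Num) | Quad(Num, Num, Num, Num)
#          | Union(Shape, Shape) | Subtract(Shape, Shape)
#   Num   -> 0 | 1 | ... | 15
GRAMMAR = {
    "Shape": [("Circle", ["Num"] * 3), ("Quad", ["Num"] * 4),
              ("Union", ["Shape"] * 2), ("Subtract", ["Shape"] * 2)],
    "Num": [(str(i), []) for i in range(16)],
}


@dataclass(frozen=True)
class Node:
    symbol: str                      # nonterminal this node derives from
    label: str                       # production chosen, e.g. "Union" or "7"
    children: Tuple["Node", ...] = ()

    def __str__(self) -> str:
        if not self.children:
            return self.label
        return f"{self.label}({', '.join(map(str, self.children))})"


def sample(symbol: str, rng: random.Random, depth: int = 0, max_depth: int = 3) -> Node:
    """Draw a random derivation of `symbol`; the result is always grammatical."""
    rules = GRAMMAR[symbol]
    if depth >= max_depth:
        # At the depth limit, forbid productions that would nest more Shapes.
        rules = [r for r in rules if "Shape" not in r[1]]
    label, child_symbols = rng.choice(rules)
    return Node(symbol, label,
                tuple(sample(s, rng, depth + 1, max_depth) for s in child_symbols))


def subtrees(node: Node, path: Tuple[int, ...] = ()) -> Iterator[Tuple[Tuple[int, ...], Node]]:
    """Yield (path, node) pairs; a path lists child indices from the root."""
    yield path, node
    for i, child in enumerate(node.children):
        yield from subtrees(child, path + (i,))


def replace_at(node: Node, path: Tuple[int, ...], new: Node) -> Node:
    """Return a copy of `node` with the subtree at `path` swapped for `new`."""
    if not path:
        return new
    kids = list(node.children)
    kids[path[0]] = replace_at(kids[path[0]], path[1:], new)
    return Node(node.symbol, node.label, tuple(kids))


def mutate(tree: Node, rng: random.Random) -> Node:
    """One noising step: resample a random subtree from the same nonterminal,
    so the mutated program is still syntactically valid."""
    path, old = rng.choice(list(subtrees(tree)))
    return replace_at(tree, path, sample(old.symbol, rng, depth=len(path)))


def add_noise(tree: Node, steps: int, rng: random.Random) -> List[Node]:
    """Apply `steps` mutations and return the trajectory, clean program first."""
    trajectory = [tree]
    for _ in range(steps):
        trajectory.append(mutate(trajectory[-1], rng))
    return trajectory


def is_valid(node: Node) -> bool:
    """Check that every node matches some production in GRAMMAR."""
    rule = dict(GRAMMAR[node.symbol]).get(node.label)
    return (rule is not None
            and [c.symbol for c in node.children] == rule
            and all(is_valid(c) for c in node.children))


if __name__ == "__main__":
    rng = random.Random(0)
    for t, program in enumerate(add_noise(sample("Shape", rng), steps=3, rng=rng)):
        print(t, program, is_valid(program))
```

A denoising network would then be trained on trajectories like these to propose edits that move a noisy program back toward the clean one; that training step is not shown here.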

The researchers' method outperformed two existing methods, CSGNet and REPL Flow, on inverse graphics tasks. In both inverse graphics domains tested, combining the diffusion policy with beam search solved problems with fewer renderer calls. Notably, the method could correct small errors that other models missed, and it could handle stochastic hand-drawn sketches.

The research marks a significant step in using neural diffusion models to develop programs through iterative construction, execution, and editing. The result is a robust mechanism that explores the space of possible programs more efficiently and corrects errors quickly. It outperformed existing methods at synthesizing complex programs, pointing toward new capabilities for code development.