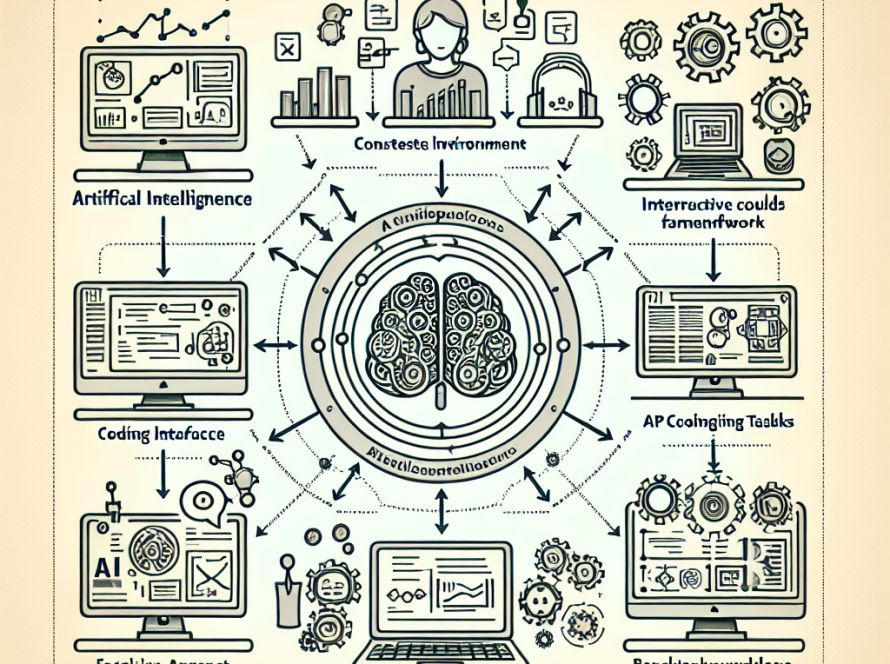

Large language models (LLMs) are used across sectors such as technology, healthcare, finance, and education, where they are transforming established workflows. Reinforcement Learning from Human Feedback (RLHF) is often applied to fine-tune these models. RLHF uses human feedback to guide learning, and it was earlier applied to Reinforcement Learning (RL) problems such as simulated robotic locomotion and playing Atari games.

Despite their usefulness, state-of-the-art LLMs require careful preparation before they work well as human assistants. This paper, contributed by researchers from multiple universities and institutions, examines RLHF’s core components, with a focus on the role of the reward function. The researchers also define a theoretical oracular reward intended to serve as a reference standard for future RLHF work.

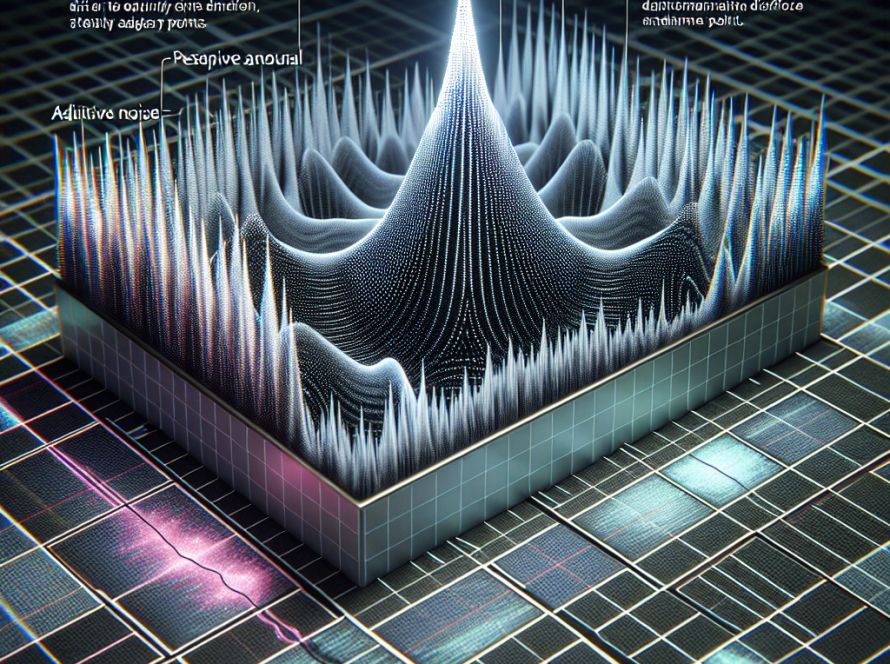

The purpose of reward learning in RLHF is to translate human feedback into a reward function that can be optimized, which in turn is used to build an aligned language model. The paper describes two families of RL methods that rely on this reward: value-based methods, which estimate the value of a state as the expected cumulative reward obtainable from it, and policy-gradient methods, which train a parameterized policy directly from reward feedback.
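The difference between the two families can be made concrete with a small sketch. The code below is illustrative only and is not taken from the paper: `discounted_returns` computes the cumulative discounted reward that value-based methods try to estimate, and `reinforce_step` applies one REINFORCE-style policy-gradient update to a softmax policy. The function names, the discount factor `gamma`, and the learning rate `lr` are assumptions chosen for the example.

```python
import math


def discounted_returns(rewards, gamma=0.99):
    """Value-based view: cumulative discounted reward from each step onward."""
    returns = []
    running = 0.0
    # Walk backwards so each return reuses the one that follows it.
    for r in reversed(rewards):
        running = r + gamma * running
        returns.append(running)
    returns.reverse()
    return returns


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_step(logits, action, reward, lr=0.1):
    """Policy-gradient view: one REINFORCE update on a softmax policy.

    The gradient of log pi(action) with respect to the logits is
    one_hot(action) - probs, scaled here by the observed reward.
    """
    probs = softmax(logits)
    return [
        logit + lr * reward * ((1.0 if i == action else 0.0) - p)
        for i, (logit, p) in enumerate(zip(logits, probs))
    ]


# Illustrative usage with made-up numbers.
print(discounted_returns([1.0, 1.0, 1.0], gamma=0.5))
print(softmax(reinforce_step([0.0, 0.0], action=0, reward=1.0)))
```

In an RLHF setting the scalar `reward` would come from the learned reward model rather than from an environment, but the update rule has the same shape.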

The trained reward model is then used to fine-tune the language model with RL, using the Proximal Policy Optimization (PPO) and Advantage Actor-Critic (A2C) algorithms. In each case, training starts from a pre-trained language model that is prompted with contexts drawn from a dataset.
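As a rough illustration of what these two algorithms optimize, the sketch below shows a one-step advantage estimate of the kind used in actor-critic methods such as A2C, and the standard PPO clipped surrogate objective for a single sample. It is a minimal sketch, not the paper's implementation: the function names, the clipping range `clip_eps=0.2`, and the example numbers are assumptions.

```python
import math


def advantage(reward, value, next_value, gamma=0.99):
    """One-step advantage estimate: r + gamma * V(s') - V(s)."""
    return reward + gamma * next_value - value


def ppo_clipped_objective(logp_new, logp_old, adv, clip_eps=0.2):
    """PPO clipped surrogate for one sample (a quantity to be maximized).

    The probability ratio between the new and old policy is clipped to
    [1 - clip_eps, 1 + clip_eps] so a single update cannot move the
    policy too far from the one that generated the data.
    """
    ratio = math.exp(logp_new - logp_old)
    clipped = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
    return min(ratio * adv, clipped * adv)


# Illustrative usage: the reward would be the reward model's score
# for a generated response; the values here are made up.
adv = advantage(reward=1.0, value=0.5, next_value=0.5, gamma=1.0)
print(ppo_clipped_objective(math.log(1.5), 0.0, adv))
```

With a positive advantage the clip caps how much the objective can gain from raising the probability of the sampled response; with a negative advantage the unclipped term dominates, so large harmful shifts are still fully penalized.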

The paper emphasizes a critical analysis of the reward models, highlights the impact of different implementation choices, and addresses the challenges faced while learning these reward functions. Potential limitations of the RLHF approach, types of feedback, algorithm variations, and alternative methods for model alignment are also discussed.

The success of this research is attributed to its researchers. More information about their work and additional findings can be found in their original research paper.