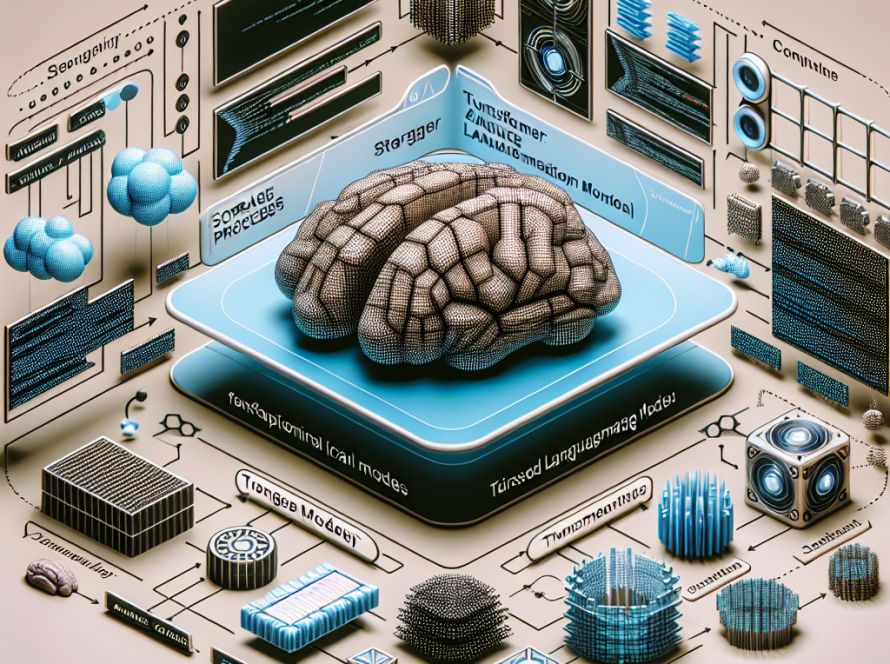

Large language models (LLMs) have substantially changed machine learning practice, shifting the field from traditional end-to-end training toward pretrained models steered by carefully crafted prompts. This shift raises a compelling question for researchers: can a pretrained LLM function like a neural network whose parameters are its natural-language prompt?

LLMs have been applied to planning, optimization, and multi-agent systems. Examples include generating new solutions to optimization problems based on previous attempts, supervising visual representation learning, and creating zero-shot classification criteria for images. Optimizing input prompts in the discrete space of natural language has, in turn, given rise to automatic prompt optimization techniques.

However, much of this research has not fully explored the potential of LLMs as function approximators parameterized by natural language prompts. Recognizing this, researchers from the Max Planck Institute for Intelligent Systems, the University of Tübingen, and the University of Cambridge have introduced the Verbalized Machine Learning (VML) framework. VML treats an LLM as a function approximator whose parameters are its text prompt, so that learning a model amounts to learning a prompt.
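To make the idea of a prompt-parameterized function concrete, here is a minimal sketch. It is not the authors' implementation: the `VerbalModel` class, the prompt template, and the `fake_llm` stand-in are all assumptions made for illustration, and a real setup would replace `fake_llm` with a call to an actual language model.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical interface: any callable mapping a prompt string to a completion string.
LLM = Callable[[str], str]


@dataclass
class VerbalModel:
    """A function x -> y whose only 'parameters' are the text in `theta`."""
    llm: LLM
    theta: str  # natural-language model description

    def __call__(self, x: float) -> str:
        # Data and parameters share one representation: text in a single prompt.
        prompt = f"Model description: {self.theta}\nInput: {x}\nOutput:"
        return self.llm(prompt).strip()


def fake_llm(prompt: str) -> str:
    """Deterministic stand-in for a real LLM call (assumption for this sketch)."""
    x = float(prompt.split("Input:")[1].split("\n")[0])
    return str(2 * x) if "double" in prompt else str(x)


# Same "architecture", different text parameters, different functions.
doubler = VerbalModel(fake_llm, theta="double the input")
identity = VerbalModel(fake_llm, theta="return the input unchanged")
```

Changing `theta` changes the function that is computed while the underlying model stays fixed, which is the sense in which the prompt plays the role of the parameters.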

VML has several notable advantages over traditional machine learning approaches. Because the model parameters are human-readable text, it is far more interpretable than the often opaque weights of a neural network. The framework also represents both data and model parameters as tokens, which gives a single, uniform way to handle a variety of machine learning tasks.

VML has been evaluated on a range of machine learning tasks, including regression, classification, and image analysis. According to the reported results, it outperformed traditional neural networks on both simple and complex tasks. Because the model is plain text at every step, the learning process is also easy to follow, and its progression can be monitored closely.
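The following sketch illustrates what monitoring such a learning process could look like. The article does not describe the update procedure, so the loop below is an assumption: a hypothetical `optimizer` callable (in practice, possibly a second LLM call) receives the current description plus textual feedback and returns a revised description. Both `stub_learner` and `stub_optimizer` are deterministic placeholders, not real LLM behavior.

```python
from typing import Callable, List, Tuple

Learner = Callable[[str, float], float]   # (theta, x) -> prediction
Optimizer = Callable[[str, str], str]     # (theta, feedback) -> revised theta


def train(theta: str, data: List[Tuple[float, float]],
          learner: Learner, optimizer: Optimizer, steps: int) -> List[str]:
    """Return the full history of text parameters, one entry per step."""
    history = [theta]
    for _ in range(steps):
        # Describe predictions and targets in plain text.
        feedback = "\n".join(
            f"x={x}, predicted={learner(theta, x)}, target={y}" for x, y in data
        )
        theta = optimizer(theta, feedback)
        history.append(theta)  # every intermediate model is readable text
    return history


def stub_learner(theta: str, x: float) -> float:
    """Placeholder: parses a description of the form 'y = a * x'."""
    a = float(theta.split("=")[1].split("*")[0])
    return a * x


def stub_optimizer(theta: str, feedback: str) -> str:
    """Placeholder: re-estimates the slope as the mean target/x ratio."""
    ratios = []
    for line in feedback.splitlines():
        fields = dict(part.split("=") for part in line.split(", "))
        ratios.append(float(fields["target"]) / float(fields["x"]))
    return f"y = {sum(ratios) / len(ratios)} * x"


history = train("y = 1.0 * x", [(1.0, 2.0), (2.0, 4.0)],
                stub_learner, stub_optimizer, steps=2)
```

Printing `history` shows each intermediate model as a sentence-like description, in contrast to inspecting a weight matrix after each gradient step.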

In classification tasks, VML has displayed both adaptability and interpretability. It quickly learned decision boundaries for linearly separable data and, when given prior knowledge, produced accurate results on data that are not linearly separable. Its performance on medical image classification also points to potential for real-world applications.

The results also exposed a few drawbacks. Training showed large variance, owing to the stochastic nature of language model inference. In addition, numerical precision issues in language models can lead to fitting errors even when the model has correctly identified the underlying symbolic expression.
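The second drawback can be illustrated with a small simulation. The sketch below assumes a model that has the right formula but carries out the arithmetic with limited precision. Rounding to two significant digits is only a stand-in chosen for illustration, not a description of how any actual LLM computes, and the coefficients are made up.

```python
from typing import List


def exact_model(x: float) -> float:
    """The 'correct' symbolic expression (illustrative coefficients)."""
    return 3.7 * x + 4.2


def imprecise_eval(x: float, sig_digits: int = 2) -> float:
    """Right formula, but the result is kept to only a few significant digits.

    This simulates imprecise text-based arithmetic; it is an assumption
    of this sketch, not measured LLM behavior.
    """
    y = exact_model(x)
    return float(f"{y:.{sig_digits}g}")


xs: List[float] = [1.0, 12.5, 103.0]
errors = [abs(imprecise_eval(x) - exact_model(x)) for x in xs]
mse = sum(e * e for e in errors) / len(errors)

for x, e in zip(xs, errors):
    print(f"x={x}: absolute error {e:.3f}")
print(f"mean squared error: {mse:.3f}")
```

Even though the expression itself is exactly right, the fit error is nonzero and grows with the magnitude of the output, which is why a learned description can look correct while the measured loss remains above zero.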

Despite these challenges, VML is a promising approach to machine learning tasks, thanks to its interpretability, flexibility, and ability to incorporate domain knowledge. Although there is room for improvement, VML adds to the possibilities that LLMs are opening up in machine learning.