Model-based reinforcement learning (MBRL) faces critical challenges, especially when it must rely on imperfect dynamics models in complex environments. When the learned model predicts the environment's dynamics inaccurately, the policy trained on its rollouts is often suboptimal. What matters is not only prediction accuracy but also how well the model adapts across varied scenarios, and this need has driven a range of new MBRL methods.

MBRL research has produced several methods for coping with these model inaccuracies. For instance, Plan to Predict (P2P) learns a model that forecasts uncertainty, so that rollouts can steer clear of regions where the model is unreliable. Branched and bidirectional rollouts use short rollout horizons so that model errors have less room to compound, at the cost of reduced planning capability. Another approach, Model-Ensemble Exploration and Exploitation (MEEE), reduces the impact of model errors during rollouts by incorporating uncertainty into the loss calculation, which was a notable advance for the field.
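The horizon trade-off behind branched rollouts can be illustrated with a toy example. The sketch below assumes hypothetical one-dimensional linear dynamics and a learned model whose decay coefficient is slightly wrong (0.95 instead of 0.90). It is not taken from any of the methods above; it only shows how a small one-step model error compounds over an open-loop rollout.

```python
from typing import List

# Hypothetical scalar dynamics, chosen only for illustration.
TRUE_A = 0.90   # decay coefficient of the real environment
MODEL_A = 0.95  # slightly wrong coefficient of the learned model


def step(a: float, s: float, u: float) -> float:
    """Linear dynamics s' = a * s + u."""
    return a * s + u


def rollout_errors(s0: float, actions: List[float]) -> List[float]:
    """Absolute gap between the real and model-predicted state after each
    step of an open-loop rollout that starts from the same real state."""
    s_true, s_model, errors = s0, s0, []
    for u in actions:
        s_true = step(TRUE_A, s_true, u)
        s_model = step(MODEL_A, s_model, u)
        errors.append(abs(s_model - s_true))
    return errors


errors = rollout_errors(1.0, [1.0] * 20)
for h in (1, 5, 20):
    print(f"horizon {h:2d}: error {errors[h - 1]:.3f}")
```

With these assumed coefficients the gap is 0.05 after one step but exceeds 4 after twenty steps. Starting short rollouts from states sampled from real experience keeps the error near the low end of this curve, which is also why such rollouts limit how far ahead the agent can plan.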

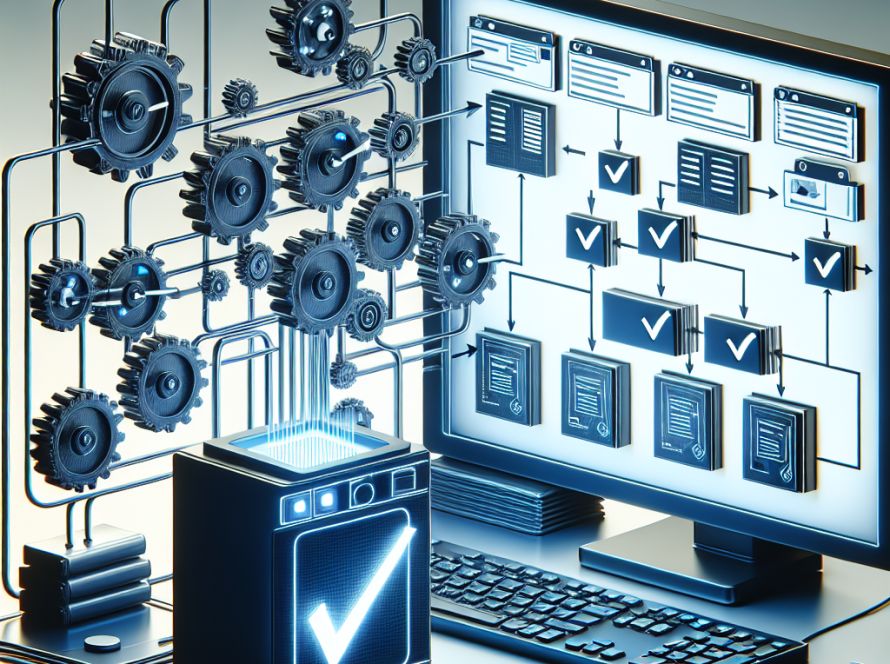

Researchers from the University of Maryland, Tsinghua University, JPMorgan AI Research, and the Shanghai Qi Zhi Institute have proposed a new MBRL approach called COPlanner. At its core is uncertainty-aware policy-guided model predictive control (UP-MPC), which estimates uncertainty and uses it to select actions. COPlanner uses this planner both to keep model rollouts conservative, by penalizing uncertain actions, and to make exploration of the real environment optimistic, by favoring them. The authors also ran a detailed ablation study on the Hopper-hop task to compare different uncertainty estimation methods and their computational overhead.
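A minimal sketch of the UP-MPC idea might look like the following, assuming an ensemble of dynamics models whose disagreement (the standard deviation of their predictions) serves as the uncertainty estimate. Each candidate first action is the policy's action plus Gaussian noise; it is rolled out for a few steps with the policy choosing the remaining actions and scored by predicted reward plus `alpha` times uncertainty. A positive `alpha` rewards uncertainty (optimistic exploration) and a negative `alpha` penalizes it (conservative rollouts). The toy linear ensemble, the reward, and every name here (`make_ensemble`, `up_mpc_action`, `alpha`, `num_candidates`, `horizon`, `noise`) are hypothetical illustrations, not COPlanner's actual code.

```python
import random
import statistics
from typing import Callable, List, Optional, Sequence

# A dynamics model maps (state, action) to a predicted next state.
Model = Callable[[float, float], float]
Policy = Callable[[float], float]


def make_ensemble(b_coefs: Sequence[float], a_coef: float = 0.9) -> List[Model]:
    """Hypothetical toy ensemble: members differ only in how strongly the action
    moves the state, so they disagree more as |action| grows."""
    return [lambda s, u, b=b: a_coef * s + b * u for b in b_coefs]


def reward(state: float, target: float = 1.0) -> float:
    """Hypothetical reward: negative squared distance to a target state."""
    return -(state - target) ** 2


def up_mpc_action(
    state: float,
    policy: Policy,
    ensemble: List[Model],
    alpha: float,
    num_candidates: int = 8,
    horizon: int = 3,
    noise: float = 0.5,
    rng: Optional[random.Random] = None,
) -> float:
    """Return the candidate first action whose short model rollout maximizes
    the sum over steps of (reward + alpha * ensemble disagreement)."""
    rng = rng or random.Random(0)  # fixed seed by default, for reproducibility
    best_score, best_action = float("-inf"), 0.0
    for _ in range(num_candidates):
        # Candidate first action: the policy's action plus exploration noise.
        first_action = policy(state) + rng.gauss(0.0, noise)
        s, action, score = state, first_action, 0.0
        for _ in range(horizon):
            preds = [model(s, action) for model in ensemble]
            uncertainty = statistics.pstdev(preds)  # ensemble disagreement
            s = statistics.fmean(preds)             # continue from the mean prediction
            score += reward(s) + alpha * uncertainty
            action = policy(s)                      # later steps follow the policy
        if score > best_score:
            best_score, best_action = score, first_action
    return best_action


ensemble = make_ensemble([0.8, 1.0, 1.2])
zero_policy: Policy = lambda s: 0.0
optimistic = up_mpc_action(0.0, zero_policy, ensemble, alpha=1000.0)
conservative = up_mpc_action(0.0, zero_policy, ensemble, alpha=-1000.0)
print(f"optimistic: {optimistic:.3f}, conservative: {conservative:.3f}")
```

Because both calls see the same seeded candidates, the optimistic setting picks a larger-magnitude (more uncertain) action than the conservative one. Flipping the sign of a single coefficient is what lets one planner serve both exploration and rollouts in this sketch.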

The paper also compares COPlanner directly against existing methods. It includes visualizations of trajectories on simulated control tasks such as Hopper-hop and Quadruped-walk, contrasting DreamerV3 with COPlanner-DreamerV3 (COPlanner built on top of DreamerV3). These visualizations illustrate COPlanner's improvements over the baseline on tasks of varying complexity.

According to the paper, COPlanner substantially improves sample efficiency and overall performance on both proprioceptive and visual continuous control tasks. On the more complex visual tasks, the best results come from combining optimistic exploration with conservative rollouts. The paper also analyzes how model prediction error and rollout uncertainty change as the number of environment steps increases.

The COPlanner framework is an important development in MBRL. By integrating conservative planning with optimistic exploration, it directly confronts a fundamental MBRL challenge: learning a good policy from an imperfect model. This integration not only deepens our understanding of MBRL but also offers a practical path toward real-world applications.