Together AI has introduced Together MoA, an implementation of its Mixture of Agents (MoA) approach, which combines the strengths of multiple large language models (LLMs) to improve response quality and sets new state-of-the-art results on several benchmarks.

MoA uses a layered architecture in which each layer contains several LLM agents. Each agent takes the outputs of the preceding layer as auxiliary information when generating its own, refined response. This lets MoA combine the diverse capabilities of different models into a single system that is more robust and versatile than any of its parts. Built from open-source models, Together MoA achieves a 65.1% length-controlled win rate on AlpacaEval 2.0, surpassing the previous leader, GPT-4o, at 57.5%.
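The layered data flow can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Together AI's implementation: each "agent" is a hypothetical plain function that takes the user prompt plus the previous layer's responses, standing in for a real LLM call, and the final layer is assumed to hold a single aggregator.

```python
from typing import Callable, List

# Hypothetical agent type: (user prompt, previous layer's responses) -> response.
# In a real system each agent would wrap an LLM API call.
Agent = Callable[[str, List[str]], str]


def mixture_of_agents(prompt: str, layers: List[List[Agent]]) -> str:
    """Pass the prompt through each layer; the last layer must hold one aggregator."""
    if not layers or len(layers[-1]) != 1:
        raise ValueError("expected at least one layer, ending in a single aggregator")
    previous: List[str] = []  # the first layer sees no earlier responses
    for layer in layers:
        # Every agent in a layer sees the same prompt and ALL outputs
        # produced by the preceding layer (its auxiliary information).
        previous = [agent(prompt, previous) for agent in layer]
    return previous[0]  # output of the final aggregator


def make_toy_agent(name: str) -> Agent:
    """Hypothetical stand-in model that reports how many references it received."""
    def agent(prompt: str, refs: List[str]) -> str:
        return f"{name}({len(refs)} refs)"
    return agent


layers = [
    [make_toy_agent("a"), make_toy_agent("b"), make_toy_agent("c")],  # proposers
    [make_toy_agent("d"), make_toy_agent("e"), make_toy_agent("f")],  # refining layer
    [make_toy_agent("agg")],                                          # final aggregator
]
result = mixture_of_agents("What is MoA?", layers)
print(result)  # agg(3 refs)
```

The toy agents only count their inputs, which makes it easy to see that every agent after the first layer receives all three responses from the layer before it.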

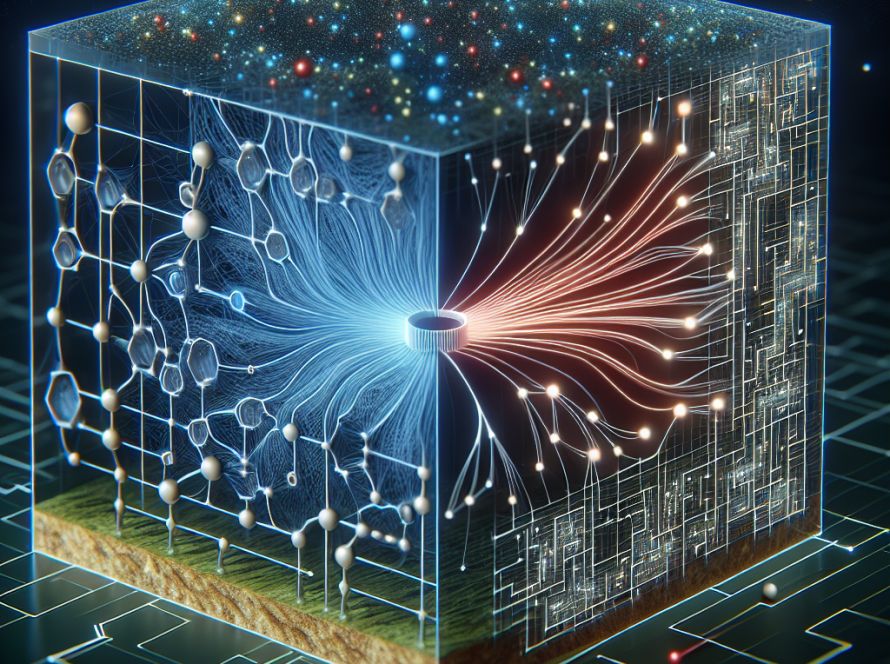

MoA is motivated by the “collaborativeness” of LLMs: an LLM tends to generate better responses when it is shown outputs from other models, even when those models are less capable on their own. Building on this observation, MoA assigns models two roles, “proposers” and “aggregators.” Proposers generate initial reference responses, while aggregators synthesize these responses into a single higher-quality output. The process repeats over several layers, each refining the previous layer's responses, until the final layer produces the answer.
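The aggregator's job of merging proposer outputs can be illustrated by how its input prompt might be assembled. The sketch below is hypothetical: the function name `build_aggregator_prompt` and the instruction wording are illustrative assumptions, not the prompt Together AI actually uses.

```python
from typing import List


def build_aggregator_prompt(user_prompt: str, references: List[str]) -> str:
    """Fold proposer responses into one prompt for an aggregator model.

    The instruction text is a hypothetical paraphrase, not Together AI's prompt.
    """
    header = (
        "You are given several candidate responses to the user's query. "
        "Some may be incorrect, so evaluate them critically and synthesize "
        "a single accurate, high-quality answer."
    )
    # Number each proposer response so the aggregator can compare them.
    numbered = "\n".join(f"{i}. {ref}" for i, ref in enumerate(references, start=1))
    return f"{header}\n\nResponses:\n{numbered}\n\nQuery: {user_prompt}"


proposals = [
    "Paris is the capital of France.",
    "The capital of France is Paris, on the Seine.",
    "I think it is Lyon.",  # output from a weaker proposer is still passed along
]
print(build_aggregator_prompt("What is the capital of France?", proposals))
```

Passing along even a wrong proposal is deliberate here: the collaborativeness observation is that the aggregator can still benefit from seeing varied responses, provided it is told to weigh them critically.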

Together MoA performs strongly across several benchmarks, including AlpacaEval 2.0, MT-Bench, and FLASK. It took the top positions on the AlpacaEval 2.0 and MT-Bench leaderboards, and its 7.6-percentage-point lead over GPT-4o on AlpacaEval 2.0 shows that a combination of open-source models can outperform a leading closed-source model.
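The 7.6-point margin is an absolute difference between the two AlpacaEval 2.0 scores of 65.1% and 57.5%, which is easy to confuse with a relative improvement. A quick check of both readings:

```python
moa_score = 65.1    # Together MoA, AlpacaEval 2.0 (%)
gpt4o_score = 57.5  # GPT-4o, AlpacaEval 2.0 (%)

# Absolute gain, in percentage points.
absolute_gain = round(moa_score - gpt4o_score, 1)
# Relative gain, as a percentage of GPT-4o's score.
relative_gain = round((moa_score - gpt4o_score) / gpt4o_score * 100, 1)

print(absolute_gain, relative_gain)  # 7.6 13.2
```

Measured relative to GPT-4o's score, the same gap corresponds to roughly a 13% improvement.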

Together MoA is also designed to be cost-effective. The research found that the Together MoA configurations offer the best balance between quality and cost. This is most evident in Together MoA-Lite, which uses fewer layers yet matches GPT-4o's cost while delivering higher quality.

MoA builds on open-source models from Meta AI, Mistral AI, Microsoft, Alibaba Cloud, and Databricks, whose work on developing and testing these models played a major role in this result. Together AI plans to further optimize the MoA architecture by exploring different model choices, prompts, and configurations, with a focus on reducing time to first token and improving MoA's performance on reasoning-focused tasks.

In conclusion, Together MoA is a significant step in harnessing the collective intelligence of open-source models. Its layered, collaborative approach shows how AI systems can be made more capable, more robust, and better aligned with human reasoning, and the AI community will be watching closely as the technology evolves and finds new applications.