Complex Human Activity Recognition (CHAR) identifies the actions and behaviors of individuals in smart environments, but labeling datasets with precise timing for atomic activities (basic human behaviors) is difficult and error-prone. In real-world scenarios, accurate and detailed labels are especially hard to obtain. Addressing this challenge matters for sectors such as healthcare, elderly care, defense surveillance and emergency response.

Current CHAR methods usually require atomic activities to be labeled in detail within precise timeframes, which is labor-intensive and prone to inaccuracy. Most datasets provide only a coarse description of the activities performed within each collection interval, without specific timing or sequence labels. This adds complexity and creates room for labeling errors.

To address these difficulties, researchers at Rutgers University have proposed the Variance-Driven Complex Human Activity Recognition (VCHAR) framework. Instead of demanding precise labels, VCHAR treats atomic activity outputs as distributions over specified intervals. In other words, the system reasons about which atomic activities are likely to occur within each time window rather than exactly when each one occurs. Its generative component also makes complex activity classifications easier to understand by presenting them as video-based outputs, which is useful even for people who are not machine learning experts.
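To make "distributions over intervals" concrete, here is a minimal sketch, assuming a model that emits one probability vector over atomic activities per time step. It averages those vectors into a single window-level distribution and builds a target from nothing more than the set of activities reported for the interval. The function names, the averaging rule and the uniform target are illustrative assumptions, not the Rutgers team's implementation.

```python
from typing import List, Sequence


def window_distribution(step_probs: Sequence[Sequence[float]]) -> List[float]:
    """Average per-time-step class probabilities over one interval.

    The result is a single distribution over atomic activities for the whole
    window, so it can be compared with a target that carries no timing.
    (Hypothetical helper; averaging is an assumed aggregation rule.)
    """
    if not step_probs:
        raise ValueError("a window must contain at least one time step")
    n_classes = len(step_probs[0])
    totals = [0.0] * n_classes
    for probs in step_probs:
        if len(probs) != n_classes:
            raise ValueError("every time step must score the same classes")
        for k, p in enumerate(probs):
            totals[k] += p
    return [t / len(step_probs) for t in totals]


def coarse_target(present: Sequence[int], n_classes: int) -> List[float]:
    """Build a target from an unordered set of reported atomic activities.

    Assumption for illustration: each reported activity gets equal mass and
    unreported activities get zero. No start/end times or order are used.
    """
    unique = sorted(set(present))
    if not unique:
        raise ValueError("at least one atomic activity must be reported")
    target = [0.0] * n_classes
    for k in unique:
        target[k] = 1.0 / len(unique)
    return target


if __name__ == "__main__":
    # Hypothetical classes: 0 = "walk", 1 = "sit", 2 = "open_door".
    steps = [
        [0.7, 0.2, 0.1],
        [0.6, 0.3, 0.1],
        [0.1, 0.8, 0.1],
        [0.2, 0.7, 0.1],
    ]
    print(window_distribution(steps))  # approximately [0.4, 0.5, 0.1]
    print(coarse_target([1, 0], 3))    # [0.5, 0.5, 0.0]
```

The only label this sketch needs is "walk and sit happened somewhere in this interval", which matches the kind of coarse annotation the article says most datasets provide.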

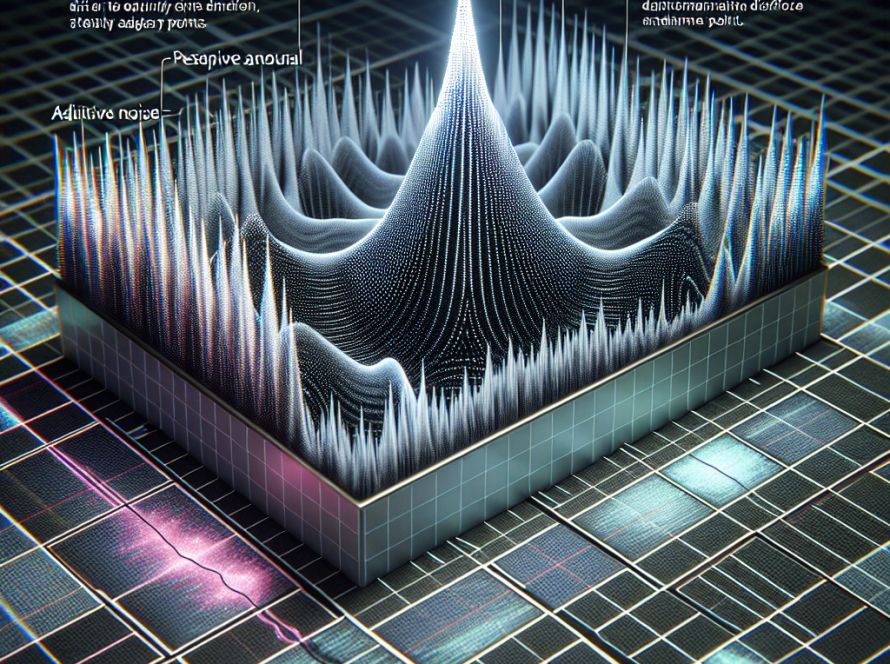

The VCHAR framework uses the Kullback-Leibler (KL) divergence to measure how far the system's predictions diverge from the ground truth. The method does not require precise knowledge of adjustable states or of which data are unnecessary. This approach raises recognition rates for complex activities in the absence of precise atomic activity labels.
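As a rough illustration of how KL divergence can score a prediction against an interval-level target, the sketch below computes KL(P || Q) = sum over k of P(k) * log(P(k) / Q(k)), where P is a target distribution for one interval and Q is a predicted distribution. Which direction of KL VCHAR uses, and how it enters training, are assumptions here; the example values are hypothetical.

```python
import math
from typing import Sequence


def kl_divergence(p: Sequence[float], q: Sequence[float], eps: float = 1e-12) -> float:
    """KL(P || Q) = sum_k P(k) * log(P(k) / Q(k)), in nats.

    P is the target distribution for an interval and Q is the predicted one.
    Terms with P(k) == 0 contribute nothing; eps guards against log(0) when
    the prediction assigns zero probability to a reported activity.
    """
    if len(p) != len(q):
        raise ValueError("distributions must cover the same classes")
    total = 0.0
    for pk, qk in zip(p, q):
        if pk > 0.0:
            total += pk * math.log(pk / max(qk, eps))
    return total


if __name__ == "__main__":
    target = [0.5, 0.5, 0.0]  # hypothetical target: activities 0 and 1 occurred
    close = [0.4, 0.5, 0.1]   # prediction that roughly matches the target
    far = [0.1, 0.1, 0.8]     # prediction that mostly misses it
    print(kl_divergence(target, close))  # about 0.112
    print(kl_divergence(target, far))    # about 1.609
```

A smaller value means the predicted distribution is closer to the target, so minimizing this quantity during training would push the model toward the reported activities without ever asking when they happened.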

VCHAR also introduces a generative decoder framework that transforms the outputs of sensor-based models into visual representations. These include visualizations of both complex and atomic activities along with their associated sensor information. The framework uses a Language Model (LM) and a Vision-Language Model (VLM) to combine diverse data sources and generate these visual outputs.

The Rutgers team also proposed a sub-model for processing the sensor information and a “one-shot tuning strategy” that allows rapid adjustment to specific scenarios, so the system can adapt to different situations. Experiments on several datasets showed that VCHAR's accuracy is competitive with traditional methods, while it offers a better user experience and clearer explanations of its results.

Combining the LM and VLM lets the framework merge assorted data sources into a coherent visual narrative of the identified activities and the underlying sensor information. This makes the system's outputs easier to understand and trust, allows users without a technical background to follow the findings, and helps communicate the results to stakeholders.

VCHAR is an effective framework for the challenges facing CHAR. By removing the need for precise labeling, it provides a system that produces straightforward visual representations of intricate activities. VCHAR achieves notable accuracy in activity recognition while allowing any user, regardless of machine learning expertise, to understand the results.

Its adaptability, which comes from built-in pre-training and one-shot tuning, makes it a promising solution for smart environment applications that require accurate, contextually relevant activity recognition and description. VCHAR thus proves to be a valuable tool for tackling the challenges that CHAR poses.